Linux from Novice to Professional Part 2 - Application Level.

After I finished studying for the LPIC-2 201 objectives, the COVID-19 epidemic reached my country and the test centers were closed, so I couldn't take the exam (at least I got a refund for the scheduled test date). Since I can't take the first LPIC-2 exam right now, and because I am very motivated to learn Linux and get to know the cyber and hacking issues associated with it, I am writing this post. In this post, unlike its predecessor, I am going to write about every component, tool and piece of knowledge you need for exam 202, while also focusing on the hacking issues hiding in the same tools we cover here. This should make the article much more interesting and useful for the OSCP studies I plan to do later. You can of course send me a message if you have a question or comment.

Objective 202-450

Chapter 0

Topic 207: Domain Name Server.

If we are going to talk about DNS, there are a few things we need to be familiar with before we get to BIND, a DNS server that can run locally on our network and provide name information to our hosts. DNS is short for Domain Name System. All PCs and devices such as printers or scanners need an IP address to communicate on the network, and there is a hierarchy in the networking world that makes this communication possible, but numbers are hard for people to remember, so we use names instead. A DNS server converts a name to an IP address (or the reverse), so communication can happen even though we work with names rather than IP addresses.

In the DNS world every private network may contain a DNS server. When a client wants to reach a server on the Internet, it sends a query to the local DNS server asking for the IP address of the name it wants to connect to. The DNS server responds with an answer containing that IP address, and the client can then build packets with that IP as the destination address and start communicating. If the local DNS server doesn't know the IP address for the name, it queries the ISP's DNS server, and if the ISP doesn't know it either, the query continues up the hierarchy until it reaches the name servers responsible for the domain, which may be run by a provider such as GoDaddy or another online name service. This hierarchy prevents every client from sending its queries straight to the public name services: just imagine every client in the world querying those servers directly for every name it looks up; they would collapse under the load.

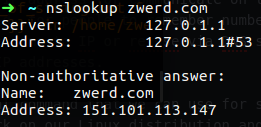

There are several commands we can use to see this conversion. The first one is nslookup, which works on our Linux distribution and also on Windows machines; we use it to find out the IP address of a domain name.

nslookup zwerd.com

By running that command I query the DNS server to find out the IP address of the zwerd.com domain.

You can see that the server address (the DNS server) is our local machine, 127.0.0.1. You can also see that the answer is non-authoritative, which means the local server is not the authoritative server for that domain; it got the answer from other servers and keeps it in its cache. Cached entries are kept for a limited time, and once that time runs out the server queries again to get fresh information.

We can also run nslookup on its own to get its interactive prompt, where we can use commands such as set to change the query for our needs.

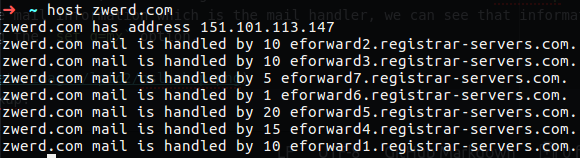

We can also use the host command. It gives us the same information as nslookup, but it also shows the mail information, which is the mail handler (MX record). We can get that information with nslookup by using the set q=mx option, but by default nslookup doesn't show it.

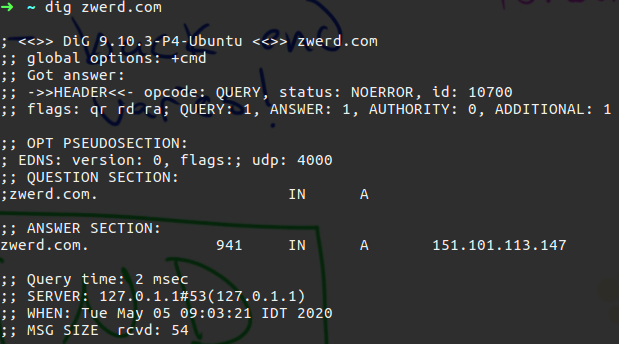

Another tool that can give us this information is dig. It also shows the query time and the cache time (TTL).

In the answer section you can see that the cache time is 941 seconds, which means that if someone requests the same information during that time, our server answers from its cache without querying the ISP's DNS server. We can also see that the query time was 2 msec and that the local server is 127.0.0.1. We can change the server we query, for example to Google's DNS server 8.8.8.8, by running the following:

dig @8.8.8.8 zwerd.com

This gives us the answer from Google's server. We can do the same with nslookup by using its server option to get the information from Google.

In the Linux world we can use BIND to set up a DNS server, but there are other DNS server types we need to know. The djbdns software package is a DNS implementation created by Daniel J. Bernstein in response to his frustration with repeated security holes in the widely used BIND DNS software. There is also PowerDNS, a DNS server developed by the PowerDNS community and Bert Hubert; it is written in C++, licensed under the GPL, and runs on most Unix derivatives. PowerDNS features a large number of different backends, ranging from simple BIND-style zone files to relational databases and load-balancing/failover algorithms. Finally there is dnsmasq, free software that provides DNS caching, a DHCP server, router advertisement and network boot features, intended for small computer networks. It was developed by Simon Kelley and released in 2001; it runs on Unix-like operating systems and is licensed under the GPL.

In the DNS world there are two types of DNS server setups: forwarding and caching. A forwarding server takes the query, tries to get the information from the next DNS server up the chain (most likely the ISP's DNS server), and gives the client the answer. A caching server takes the client's query and resolves it itself, building up cache information for the queried domain, including the A record, the MX information and the other records related to that domain. It does this by querying the root DNS servers, then the registry's (TLD) servers, and finally the domain's own name servers, and it stores all of this information locally.

Let's install BIND on our machine. I am using Ubuntu, so I run the following:

sudo apt install bind9 bind9utils

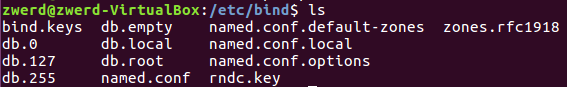

After the installation finishes we will see a new folder, /etc/bind, which contains the configuration files for our BIND server.

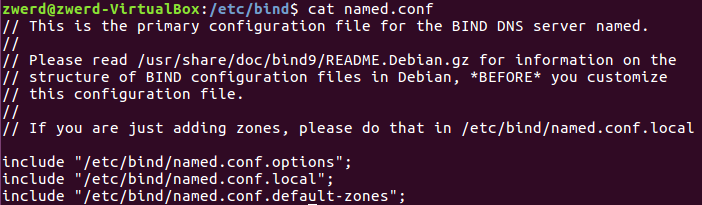

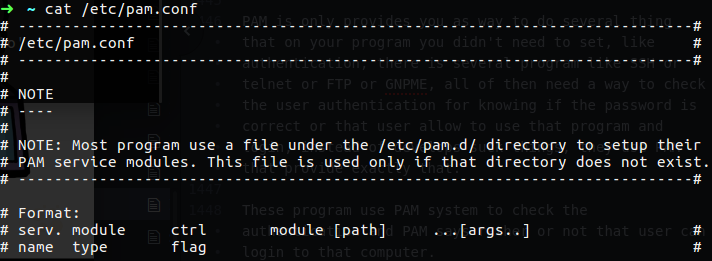

The file we want to look at is named.conf. It includes the other files our server is going to use: named.conf.options, named.conf.local and named.conf.default-zones.

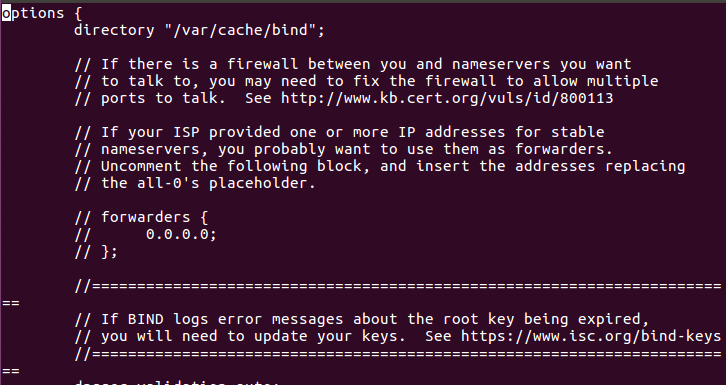

The options file contains the configuration for our server; we can set up a forwarding or a caching server with it. Right now we are going to bring up a caching DNS server with BIND.

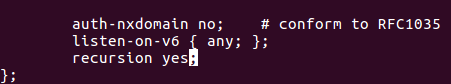

In the following image you can see lines that start with “//”. These are commented-out lines (C-style comments), so they are not used. You can also see the forwarders option, where we can set the IP address of the ISP's DNS server, which would make our server a forwarding one.

Figure 5 The named.conf.options file.

We want to set up a caching server, so we need to add one more line containing the following:

recursion yes;

Don't forget the semicolon at the end of the line, otherwise the configuration won't load.

Figure 6 The recursion line at the END.

Now we need to restart the BIND service.

sudo service bind9 restart

Now for check that it working we can run the following:

1

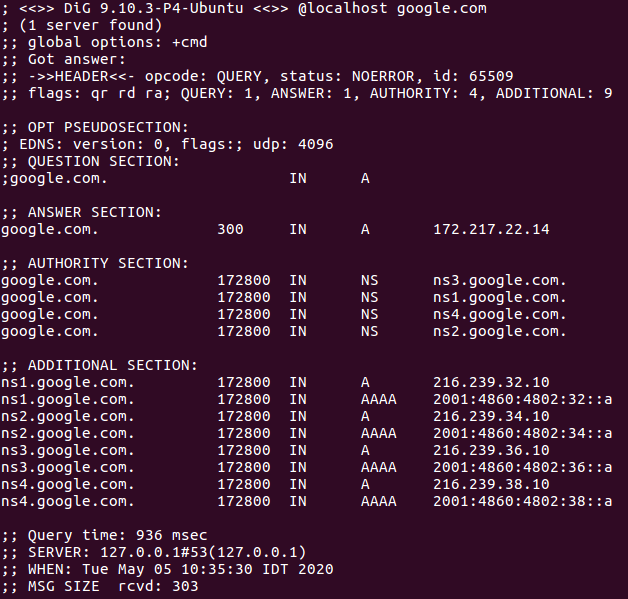

dig @localhost google.com

Figure 7 Using dig on localmachine to get google information.

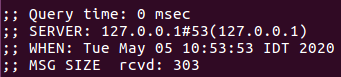

You can see that the query time was 936 ms and that the cache time is 172800 seconds, so the answer is cached for two days (48 hours). This means that if another machine on the network queries our server for google.com, it gets the cached information. If we run dig again, the query time will be very short because the answer is cached.
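To double-check the arithmetic on cache times, here is a small bash sketch that breaks a TTL in seconds into days, hours, minutes and seconds. The function name ttl_human is my own invention for illustration, not a BIND tool.

```shell
#!/usr/bin/env bash
# Sketch: break a DNS TTL (in seconds) into days, hours, minutes and seconds.
ttl_human() {
  local ttl=$1
  local d=$(( ttl / 86400 ))          # 86400 seconds per day
  local h=$(( ttl % 86400 / 3600 ))   # remaining whole hours
  local m=$(( ttl % 3600 / 60 ))      # remaining whole minutes
  local s=$(( ttl % 60 ))             # remaining seconds
  printf '%dd %dh %dm %ds\n' "$d" "$h" "$m" "$s"
}

ttl_human 172800   # → 2d 0h 0m 0s (the google.com answer)
ttl_human 941      # → 0d 0h 15m 41s (the zwerd.com answer)
```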

If we have a domain name that we want to set up locally on our server, we can do so, and the server will answer queries with what we configured. In the BIND world this kind of locally defined domain is called a zone, so we need to create a zone file for the server to answer requests the way we want.

We can also set up a reverse lookup: if we run nslookup against an IP address, it returns the name for that address, and we can use dig to do the same. Let's create our own zone.

First we need to look at the named.conf.local file, which points to the configuration files for the zones we set up. We add the following:

zone "zwerd" {
    type master;
    file "/etc/bind/db.zwerd";
};

zone "1.168.192.in-addr.arpa" {
    type master;
    file "/etc/bind/db.1.168.192";
};

The zone name in my case is zwerd. type master means this server is the primary (authoritative) server for the zone and loads its data from a local file, so the names in that domain are resolved locally. The file option says where the zone file is located; the file can be called whatever you like, but I prefer to follow the naming convention.

The second zone is for reverse lookups, so I specified the subnet for that domain in reverse order: the 192.168.1.0/24 network becomes 1.168.192.in-addr.arpa.
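The reverse name is simply the network's octets in reverse order followed by in-addr.arpa. As a sketch, these two hypothetical bash helpers (not part of BIND) build the reverse zone name for a /24 network and the PTR name that dig -x looks up for a single address. They assume a plain dotted-quad IPv4 address and do no validation.

```shell
#!/usr/bin/env bash
# Sketch: build reverse-DNS names for a dotted-quad IPv4 address.
# No validation is done; the input is assumed to look like 192.168.1.10.

# Reverse zone name for the /24 network that contains the address.
reverse_zone_24() {
  local a b c rest
  IFS=. read -r a b c rest <<< "$1"
  printf '%s.%s.%s.in-addr.arpa\n' "$c" "$b" "$a"
}

# Fully qualified PTR name for one host (the name dig -x asks for).
ptr_name() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  printf '%s.%s.%s.%s.in-addr.arpa.\n' "$d" "$c" "$b" "$a"
}

reverse_zone_24 192.168.1.10   # → 1.168.192.in-addr.arpa
ptr_name 192.168.1.10          # → 10.1.168.192.in-addr.arpa.
```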

Now I need to create those two files, so from the /etc/bind directory I run the following:

sudo cp db.local db.zwerd
sudo cp db.127 db.1.168.192

Now the new files are ready; we just need to edit them so that queries for the zwerd domain get answers.

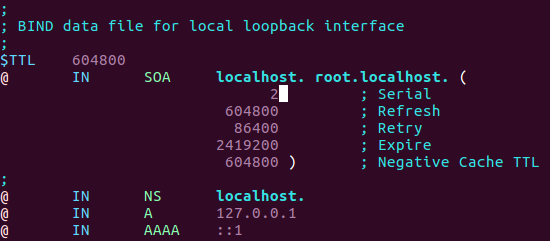

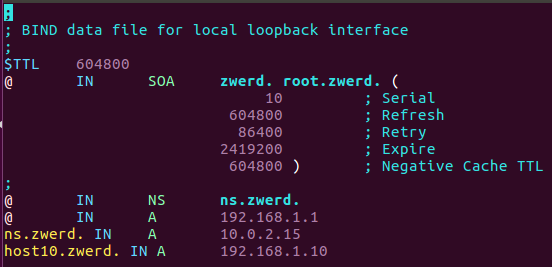

In that file you can see the SOA, short for Start of Authority record. It contains the domain name and more information, such as the retry and expire times. The serial number tracks changes: in our case it is 2 right now, and after we finish changing the file we must increase it for the changes to take effect.
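To avoid losing track of the counter, many administrators use a date-based serial in the form YYYYMMDDnn. This is only a convention, not something BIND requires; the serial just has to increase. A minimal bash sketch of a hypothetical next_serial helper, assuming the serial is a plain decimal number:

```shell
#!/usr/bin/env bash
# Sketch: compute the next SOA serial using the YYYYMMDDnn convention.
# $1 = current serial, $2 = date as YYYYMMDD (defaults to today).
next_serial() {
  local current=$1
  local today=${2:-$(date +%Y%m%d)}
  local base=$(( today * 100 ))      # YYYYMMDD00
  if (( current < base )); then
    echo $(( base + 1 ))             # first change of the day: YYYYMMDD01
  else
    echo $(( current + 1 ))          # already date-based today: just add 1
  fi
}

next_serial 2 20200415            # → 2020041501
next_serial 2020041501 20200415   # → 2020041502
```

Going from 2 to a value like 2020041501 is fine because it is still an increase, and the result stays below the 32-bit limit of the SOA serial field.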

We can also see the NS, A and AAAA records. AAAA records are used for IPv6, so we can get rid of that one. The A record contains the IP address of a host in the domain, so after we change the file it can look something like this:

Figure 10 The db.zwerd file after changes.

Please remember: in this file we can specify www as an A record pointing to the server we want; when a client runs dig for www.zwerd., it gets that www IP address. This is how it works on the Internet too.

You can see that I increased the serial number and changed the SOA to contain the domain name. Note the dot at the end of the name, which must be specified because it marks a fully qualified name; if you don't understand that, please watch the following.

You can see the name server and the IP address for the zwerd domain. Note the @ at the beginning of the line, which stands for the zone's own name (zwerd) in the zone file.

I also specified the IP address for ns.zwerd. and for a host named host10; if someone pings that host, they get that IP address as the answer.

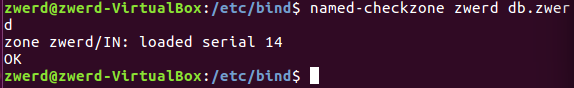

After we finish with the zone file, we can run named-checkzone to verify that the file's syntax is correct.

Figure 10-1 the named-checkzone util.

named-checkzone checks the syntax and integrity of a zone file. It performs the same checks as named does when loading a zone. This makes named-checkzone useful for checking zone files before configuring them into a name server.

We can also use named-checkconf to check the configuration files at once. For example, named-checkconf -p prints all of the BIND configuration to the screen, and named-checkconf -z performs a test load of all master zones found in named.conf.

Now I need to create the reverse zone file for that domain, so I edit the db.1.168.192 file.

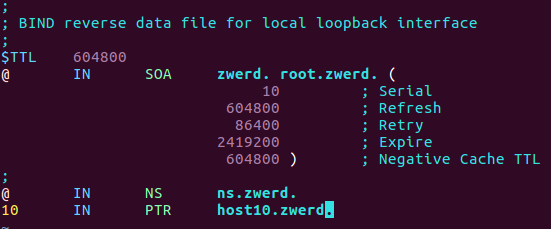

Figure 11 The db.1.168.192 file after changes.

You can see that here too I specified the ns server, which is local, so I used @ for it, and host10, whose last octet is 10 in my case.

Now we need to run rndc reload, which reloads the BIND configuration and zone files. This is recommended instead of restarting the bind9 service, because a restart would make us lose the cache we have on the server, so rndc is what we need.

sudo rndc reload
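Because a broken zone file can keep a zone from loading, it can help to check everything before reloading. This is a sketch of a hypothetical safe_reload wrapper (not a BIND command) that only calls rndc reload if named-checkconf -z succeeds; it assumes it is run as root.

```shell
#!/usr/bin/env bash
# Sketch: reload BIND only when the configuration and all master zones load.
# Run as root; named-checkconf and rndc are the BIND tools used in this post.
safe_reload() {
  if named-checkconf -z; then
    rndc reload
  else
    echo "named-checkconf -z reported errors, not reloading" >&2
    return 1
  fi
}

# Usage: safe_reload
```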

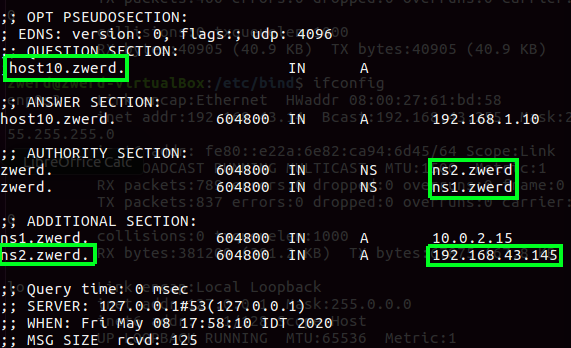

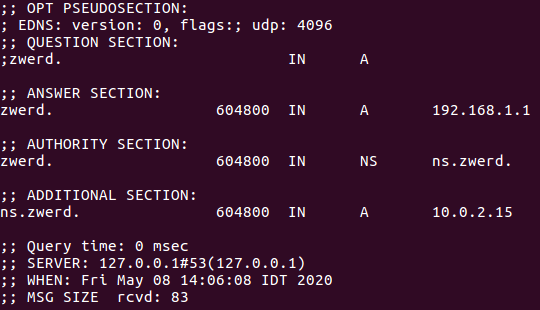

Now, if I run dig against the zwerd domain it should work and show that the zwerd address is 192.168.1.1; just remember to run dig with @localhost to query the local machine.

Figure 12 Lookup for zwerd domain from local cache.

You can also see the ns record in the 10 network. This is because this machine's address is 10.0.2.15, and since I specified the ns record as local by using the @ sign, this is the address we get back.

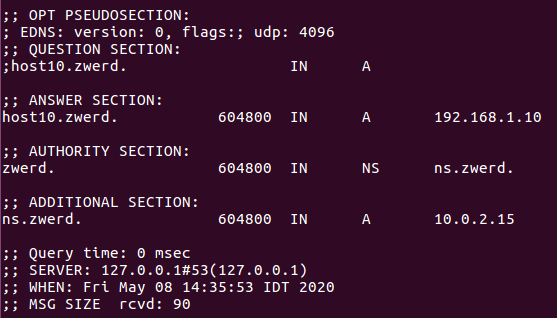

Now if I dig host10, I should get the address we set up in the db.zwerd file.

dig @localhost host10.zwerd

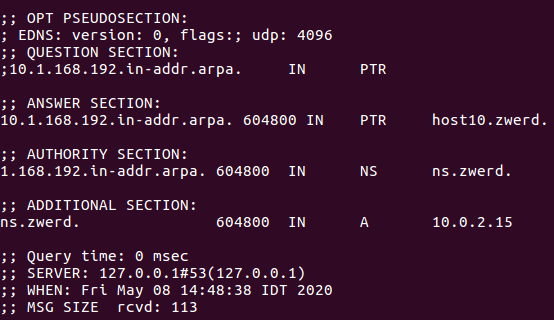

You can see that the address is 192.168.1.10, which is the record for host10 on our local server. We can run a reverse lookup to check that we set it up correctly; we just need the -x option to tell dig that it is a reverse query.

dig @localhost -x 192.168.1.10

Figure 14 Lookup for 192.168.1.10.

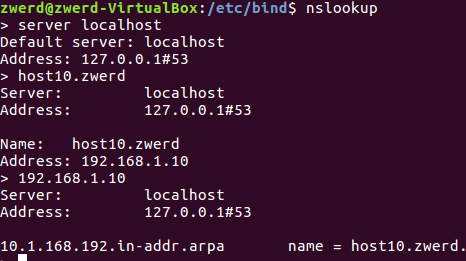

You can see that this is a PTR (pointer) record for host10. I also ran it with nslookup after changing the server to localhost, and you can see that it gives me the reverse answer, which is host10.

Figure 15 using nslookup for 192.168.1.10.

Let's talk about redundancy. If we have a DNS server in our organization and it fails, we can set up another server to take its place. In BIND we set this up as master and slave servers: on the master we specify the slave's address so the records can be transferred to it, and the slave keeps a copy of the records locally, so if the master fails, the clients in our organization can send their queries to the slave.

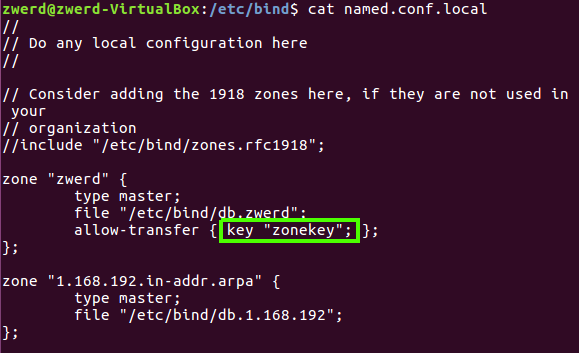

On the master server, which is my Ubuntu machine, we need to add one more line to named.conf.local, inside the zone “zwerd” section:

allow-transfer { 192.168.43.145; };

In my case the slave server's address is 192.168.43.145, and this line allows the master to transfer the zwerd zone to the slave (you can set it to any if you want anyone to be able to pull the zone from the master). Remember that in my case the master's address is 10.0.2.15.

Another change I made is in the db.zwerd file: I added the new name server, 192.168.43.145, named ns2.zwerd., and I renamed the local name server from ns to ns1.zwerd.

I also added a new NS record pointing to ns2.zwerd. Remember that every time you change this file you must update the serial number; when you are done, just run rndc reload.

Before you start setting up the slave, it is a good idea to check that the local firewall allows traffic on port 53, which is used by DNS (zone transfers use TCP port 53, while ordinary queries mostly use UDP port 53).
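One quick way to test this from the slave is bash's built-in /dev/tcp redirection. The sketch below uses a hypothetical helper name, tcp_port_open, and assumes the timeout command from coreutils is available. It only tests TCP, which is what zone transfers use; it says nothing about UDP queries.

```shell
#!/usr/bin/env bash
# Sketch: check whether a TCP port is reachable using bash's /dev/tcp.
# Returns 0 if the connection succeeds within 3 seconds, non-zero otherwise.
tcp_port_open() {
  local host=$1 port=${2:-53}
  timeout 3 bash -c '</dev/tcp/"$1"/"$2"' _ "$host" "$port" 2>/dev/null
}

# Example, run on the slave against the master from this post:
# tcp_port_open 10.0.2.15 53 && echo "port 53/tcp reachable"
```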

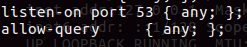

On the slave we need to change the configuration in the options file, so I added the following lines:

These settings make the server listen on port 53 for incoming queries and allow queries from any source to be answered. If you don't set them, client queries may never be answered.

In the named.conf.local file I set up the following:

zone "zwerd" {
    type slave;
    masters { 10.0.2.15; };
    file "db.zwerd";
};

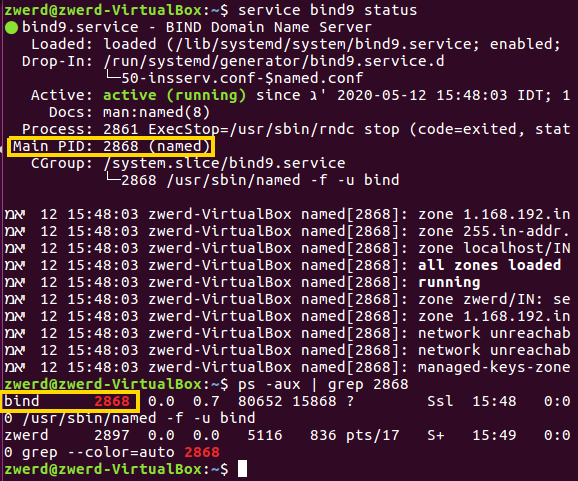

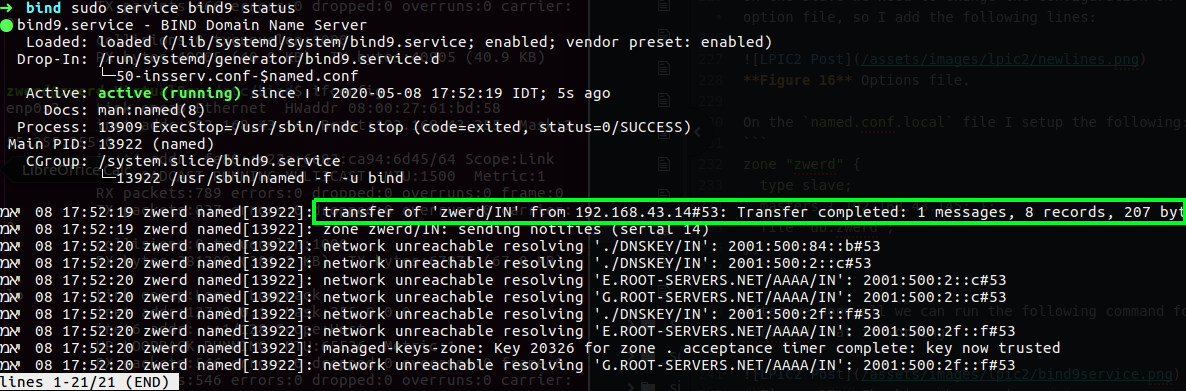

When everything is done, we can run the following command to verify that it is working:

Figure 17 Checking the service status.

Please note: if you set this up on CentOS, you need to make the changes in /etc/named.conf, and check that you didn't miss any default configuration such as listen-on port 53. When you are done, you must run setsebool -P named_write_master_zones true; this persistently enables the SELinux boolean that allows named to write zone files on the local machine, which the slave needs in order to save the transferred zone. Without it, if you run dig or nslookup you will never get the answer you expect.

Please note: to check that it works correctly on CentOS, you can find logs for the named service in the /var/named/data/named.run file.

You can see that I got 8 new records, so now I can run a new query to check that it works.

You can see that my query succeeded and I got the host10 address; you can also see ns1 and ns2, which are the master and slave servers.

The zone data is saved locally on the slave, which means that if I stop BIND on the master, I can still get the information with my query because the slave holds its own copy of the zone.
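To confirm that the slave really has the latest version of the zone, you can compare the SOA serial on both servers. This is a sketch with hypothetical helper names, assuming dig is installed; it relies on dig +short printing the SOA fields in the order mname, rname, serial, refresh, retry, expire, minimum.

```shell
#!/usr/bin/env bash
# Sketch: compare the SOA serial of a zone on the master and on the slave.
# dig +short prints: mname rname serial refresh retry expire minimum
soa_serial() {
  local server=$1 zone=$2
  dig +short "@$server" "$zone" SOA | awk 'NR == 1 { print $3 }'
}

# Succeeds only if both servers answer with the same, non-empty serial.
serials_match() {
  local master=$1 slave=$2 zone=$3 m s
  m=$(soa_serial "$master" "$zone")
  s=$(soa_serial "$slave" "$zone")
  echo "master=$m slave=$s"
  [ -n "$m" ] && [ "$m" = "$s" ]
}

# Example with the addresses from this post:
# serials_match 10.0.2.15 192.168.43.145 zwerd
```

If the slave's serial lags behind the master's, the last transfer didn't happen, and the slave's logs are the place to look.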

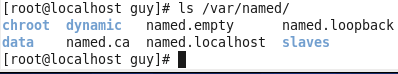

Please remember: on CentOS, to install BIND we run yum install bind and not bind9, and the configuration file is /etc/named.conf, which contains all of the setup (unlike Ubuntu, where it is split into separate files: options, local and default-zones). The /var/named/ folder contains more data related to the BIND process, such as the dump file, the statistics file and so on. Also remember that on CentOS the service is called named instead of bind or bind9.

If for some reason you need to kill the BIND process, you can find its PID in the service status, or search for named in the output of ps aux:

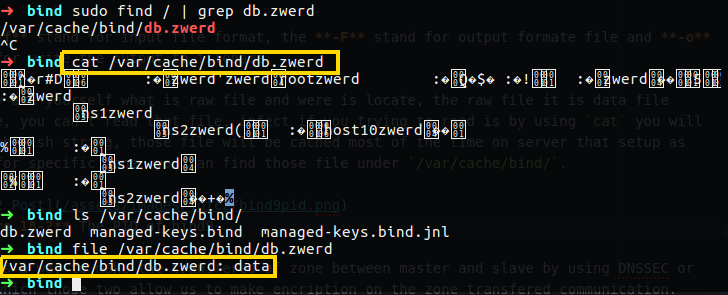

I also want to talk about an issue sysadmins may have to deal with regarding BIND releases. With the BIND 9.9 branch, zone file storage for slave zones was changed to use the raw zone format by default. BIND administrators testing 9.9 or preparing to migrate from an earlier version have asked how to deal with this format change.

Several options are available. One of them is the named-compilezone utility, which is part of the BIND distribution (installed by the bind9utils package we saw earlier); it can convert zones from text to raw and from raw to text.

#!/bin/bash
# convert raw zone file "example.net.raw", containing data for zone example.net, to text-format zone file "example.net.text"
named-compilezone -f raw -F text -o example.net.text example.net example.net.raw
# convert text format zone file "example.net.text", containing data for zone example.net, to raw zone file "example.net.raw"
named-compilezone -f text -F raw -o example.net.raw example.net example.net.text

The -f option sets the input file format, -F sets the output file format, and -o writes the output to the given filename.

You may ask yourself what a raw file is and where it is located. A raw file is a binary zone data format that you can't read directly; if you try to read it with cat you will see gibberish, which in our case is the binary zone format. These files are mostly found on servers set up as slaves for a specific zone, under /var/cache/bind/.
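Since raw files are binary, a rough way to tell the two formats apart is to look for NUL bytes, which a text zone file normally doesn't contain. This is a heuristic sketch of my own (zone_format is a hypothetical name), not a BIND tool; named-compilezone remains the proper way to read or convert the file.

```shell
#!/usr/bin/env bash
# Sketch (heuristic): report "raw" if a file contains NUL bytes, else "text".
zone_format() {
  local f=$1 total stripped
  total=$(wc -c < "$f")
  stripped=$(tr -d '\000' < "$f" | wc -c)   # size with all NUL bytes removed
  if (( stripped != total )); then
    echo raw
  else
    echo text
  fi
}

# Example: list the format of every file BIND saved for slave zones.
# for f in /var/cache/bind/*; do echo "$f: $(zone_format "$f")"; done
```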

Figure 18-2 You can see that this is raw file.

Besides converting raw files to text with named-compilezone, you can also control the format of the zone files saved on your slave server: in the zone section of named.conf.local, add the line masterfile-format text;, which makes the slave save the transferred data in text format. For example:

zone "mydomain.com" in {
    type slave;
    notify no;
    file "data/mydomain.com";
    masterfile-format text;
    masters { 10.100.200.10; };
};

Let's talk again about zone transfers. We can protect the transfer between master and slave by using TSIG, and the zone data itself can be protected with DNSSEC. Neither of them encrypts the data; instead they provide authentication and integrity, so tampering can be detected.

The difference between the two is that TSIG authenticates the transfer between master and slave: only a server that holds the shared key can request the zone and verify the signed answers. DNSSEC signs the zone data itself, which gives a mechanism to check that the data can be trusted, that is, that it really comes from the zone's owner.

In DNSSEC the private key stays on the master and is used to sign the zone, while the matching public key is published in the zone as a DNSKEY record and vouched for by a DS record in the parent zone, which is submitted through the registrar. Anyone who receives the records, including a slave or a resolver, can use the public key to verify that the data really comes from the zone's owner before trusting it.

With TSIG there is a shared key that both servers must have. Each message of the transfer is signed with an HMAC based on that key, so the master only transfers zones to servers that present the shared key, and nobody in the middle of the network can alter the data without being detected. Note that the data itself is still sent unencrypted. Let's set up a shared key now.

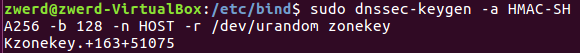

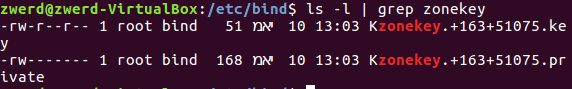

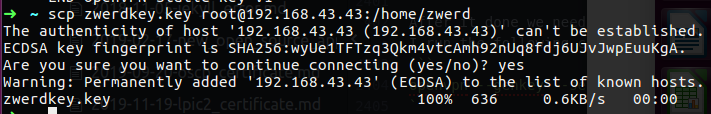

On my Ubuntu machine I am going to use dnssec-keygen. This tool creates keys that can be used for DNSSEC or TSIG; in the current example we set up a TSIG key as follows:

sudo dnssec-keygen -a HMAC-SHA256 -b 128 -n HOST -r /dev/urandom zonekeys

In that command, -a sets the algorithm, which in my case is HMAC-SHA256; -b sets the key size in bits, so I set it to 128; -n sets the name type: ZONE is used for DNSSEC zone keys, while HOST creates a host key, which is what we use for a TSIG shared key; -r sets the source of randomness, here /dev/urandom; and finally we specify the name of the key (zonekeys in the command above).

Please note: to generate SECURE keys, dnssec-keygen reads /dev/random, which blocks until enough entropy is available on your system. Some systems have very little entropy, so dnssec-keygen may take forever; to solve this I use -r /dev/urandom, which is a “non-blocking” pseudo-random device (lower security). Credit: Alex.

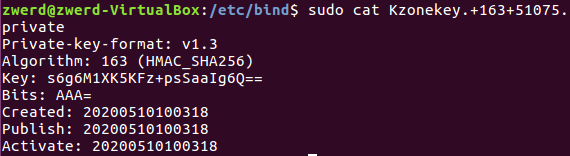

You can see that it generated the key. We now actually have two files, the .private file and the .key file, and both contain the key; in my case it doesn't really matter which one we use, because we generated a TSIG key and not a DNSSEC one.

Figure 20 The key which contain the name I setup.

You can see both files, whose names contain the name I specified plus some generated numbers. Let's look at the private file:

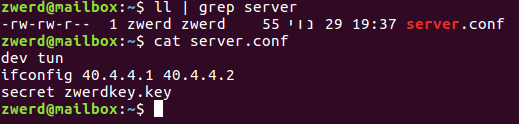

You can see that it specifies the algorithm and the key string; this string is what we need. On the master we need to do one more thing: create a key file containing the key we generated, and configure that key to be used for zone transfers.
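Copying the secret by hand is error-prone, so here is a bash sketch (tsig_key_stanza is a hypothetical helper) that reads the Key: line from the .private file and prints a key statement in the same shape as the key file used for the zone transfer. It assumes the .private layout dnssec-keygen produces for HMAC keys, with the secret on a line starting with Key:.

```shell
#!/usr/bin/env bash
# Sketch: turn the secret in a dnssec-keygen .private file into a BIND key statement.
# $1 = key name used in named.conf, $2 = .private file, $3 = algorithm (optional).
tsig_key_stanza() {
  local name=$1 private_file=$2 algo=${3:-hmac-sha256}
  local secret
  # The HMAC secret is on the line that starts with "Key:".
  secret=$(awk '$1 == "Key:" { print $2; exit }' "$private_file")
  if [ -z "$secret" ]; then
    echo "no Key: line found in $private_file" >&2
    return 1
  fi
  printf 'key "%s" {\n\talgorithm %s;\n\tsecret "%s";\n};\n' "$name" "$algo" "$secret"
}
```

For example, tsig_key_stanza zonekey Kzonekeys.+NNN+NNNNN.private > tsig.key, where the file names are placeholders. As far as I know, newer BIND releases (9.13 and later) no longer generate HMAC keys with dnssec-keygen; there, tsig-keygen -a hmac-sha256 zonekey prints a ready-made key statement directly.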

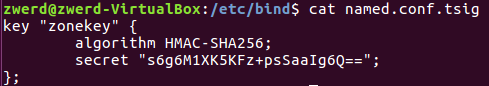

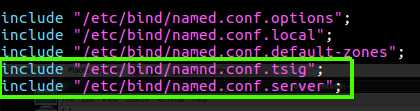

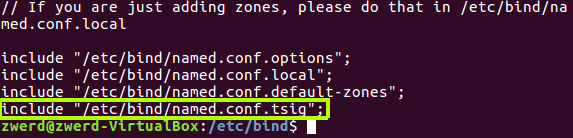

You can see that I specified the key name, the algorithm and the key itself. Now I need to include that key file in the named.conf file.

Figure 23 Include the TSIG file.

When that's done, I need to change named.conf.local so that the zone transfer is allowed only with the shared key instead of by IP address as we saw earlier.

You can see that I specified the key “zonekey”, which is the key I want to be used for this zone transfer.

Now I run rndc reload to load all the new configuration files without removing the cached data.

Please note: the rndc binary is located at /usr/sbin/rndc.

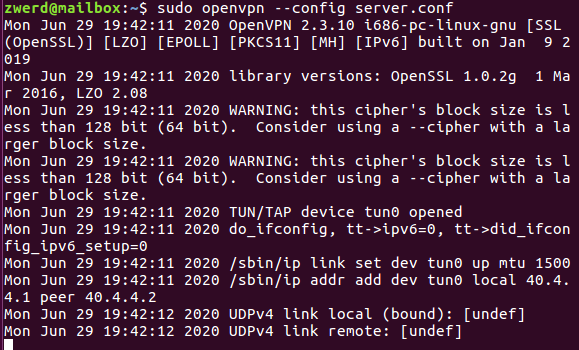

That's everything on the master side. On the slave side we have more to do, but before that let's look at the status of the service to check for errors.

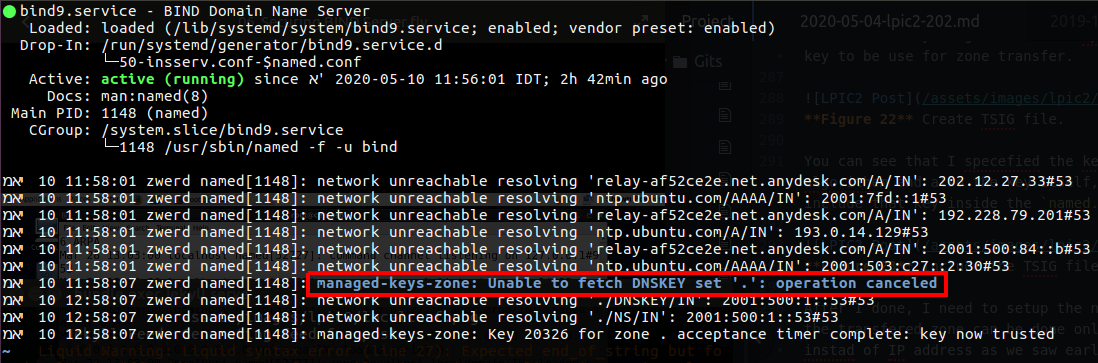

Figure 25 The bind9 service status.

You can see that the slave is unable to fetch the zone from the master, so the operation is canceled. This is because I haven't set up the key on the slave yet; to make it work I need to configure a few more things there.

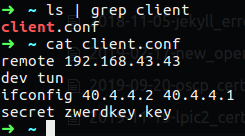

We need to create two files and add the new configuration to make it work.

The TSIG file:

key "zonekey" {
    algorithm HMAC-SHA256;
    secret "s6g6M1XK5KFz+psSaaIg6Q==";
};

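The server file (the server statement names the master the slave talks to, and tells BIND to sign messages to it with the key):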

server 192.168.43.14 {
    keys { zonekey; };
};

In the named.conf file I need to include those two files.

Now all I need to do is restart the bind9 service and check the status again.

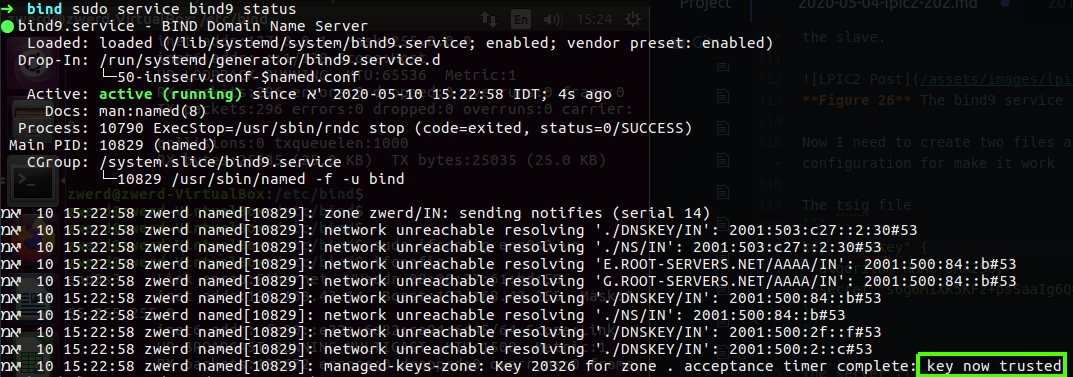

Figure 27 The service running and we have connection trusted.

You can see that the key is now trusted, which means the transfer with the master is authenticated, and now if I run dig for host10 I will find it.

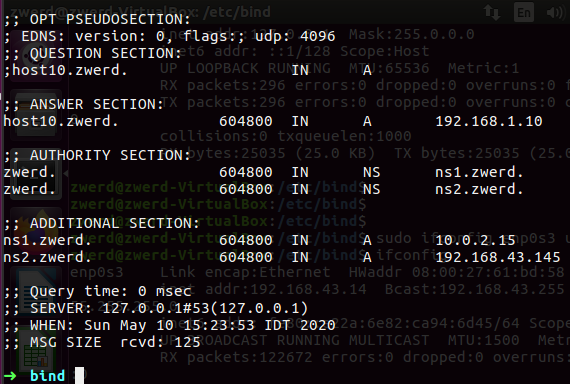

Figure 28 Can see the host10 using dig.

Please note: on CentOS these two configurations go in named.conf, each in a separate section.

As for DNSSEC, that method allows others to verify that our master server is actually the trusted source of the zone. To do so we need to create a Zone Signing Key (ZSK) and a Key Signing Key (KSK); you can read more about them on Cloudflare. After you have those two keys (ZSK and KSK), you can sign the zone with dnssec-signzone; you can read more about that on APNIC. For now you just need to know that to sign a zone you use the dnssec-signzone command.

Let's say that in our organization we want clients who try to reach the organization's home page to hit the local home page server and not the public site on the Internet. In that case we need to create a master zone on the local DNS server for these queries, returning an IP address different from the public site's.

What happens is that when someone queries for this site, the query goes to the local DNS server, which answers from its local zone file and doesn't query the ISP's DNS server for that information. This forces the client to connect to our organization's local site.

To set this up, we add a new zone section to named.conf.local with type master, declaring that this server is the master for the new zone, then create a new file named db.<zonename> and add the zone information to it.

After that, all we need to do is run rndc reload or restart the bind9 service.

Now, there is an issue we need to be aware of: if someone takes advantage of this service, they could reach the root directory and do unwanted things to our system files, so we need to isolate the BIND process and its children from the rest of the system.

To do so we can use a chroot jail, which does exactly that: it isolates the process and its children from the rest of the system. It should only be used for processes that don't run as root, because root users can break out of the jail very easily.

The idea is that you create a directory tree where you copy or link in all the system files needed for a process to run. You then use the chroot() system call to change the root directory to be at the base of this new tree and start the process running in that chroot’d environment. Since it can’t actually reference paths outside the modified root, it can’t perform operations (read/write etc.) maliciously on those locations. (credit Ben Combee)

First, let's look at how it's done on CentOS: we need to install the bind package, check that it is running, and then install bind-chroot.

So first of all I install BIND on my CentOS system.

yum install bind

Second, I start the named service and check that it is working correctly.

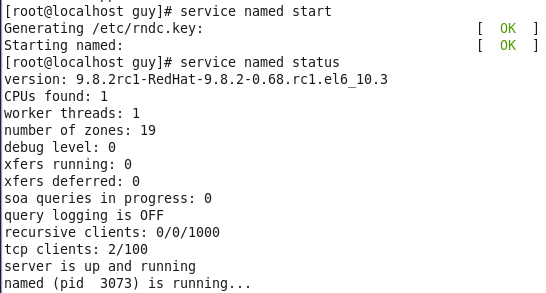

Figure 29 The bind service on CentOS.

You can see that the service started successfully and BIND is up and running. Now let's look at the file locations.

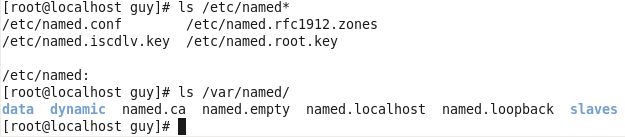

Figure 30 The bind file on CentOS.

We can see that we have files in /etc and also in the /var/named directory. Now it's time to install bind-chroot and see what it does to our directories.

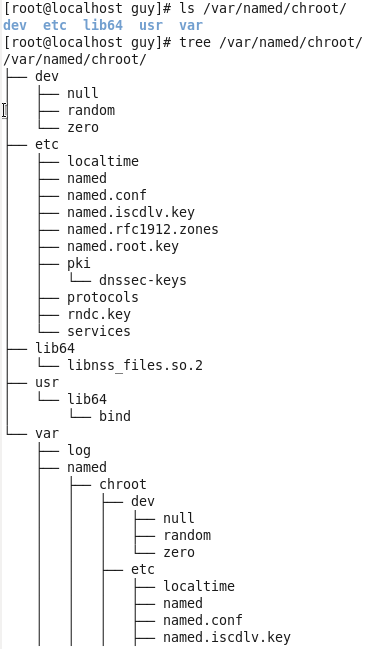

You can see that we now have the chroot folder, which contains a kind of new system environment holding the BIND files.

Figure 32 Using the tree command for path tree.

You can see that we have dev, etc, usr and var directories, and each of them contains BIND-related files. This forces BIND to use the chroot directory, which isn't the actual root directory of the system, as its root; this is the jail that separates the process from the real root filesystem.

On Ubuntu we have AppArmor, a Mandatory Access Control (MAC) system, which is a kernel (LSM) enhancement that confines programs to a limited set of resources. So we don't really need to worry about the BIND process, but if we want, we can still run BIND in a jail on Ubuntu. It's more complicated, since there is no bind-chroot tool there, so you have two options.

Remove BIND, create the jail root directory, and install BIND from source into it and configure it.

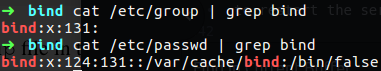

Create the jail root directory and, under it, an environment like the one we saw in the CentOS example; also research which files BIND needs and move or copy them into the jail's subdirectories. For example, two files contain information about the bind user and group, passwd and group, and they must be placed under the jail root directory in the /etc/ folder.

Figure 33 BIND passwd and group information.

There are more files related to BIND, such as /etc/ld.so.cache and /etc/localtime, so it is important to do your research to build a jail for BIND on your Ubuntu system. After that, in the /etc/default/bind9 file, we need to change the options and add the following: -u bind -t /var/named/<jail_root_name>. The -u option runs named as the bind user, and the -t option makes named chroot into the directory we set up and use it as the root for the whole BIND process. All we need to do now is restart the service.
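The copying part can be scripted. Below is a minimal bash sketch (copy_into_jail is a hypothetical helper) that creates the basic jail directories and copies each listed file into the jail while keeping its full path, using GNU cp --parents. It assumes GNU coreutils and is meant to be run as root; it doesn't create device nodes such as /dev/null, which have to be made with mknod rather than copied.

```shell
#!/usr/bin/env bash
# Sketch: create a minimal jail layout and copy files into it, keeping their paths.
# Requires GNU cp (for --parents); run as root when copying real system files.
copy_into_jail() {
  local jail=$1; shift
  local f
  mkdir -p "$jail/dev" "$jail/etc" "$jail/usr" "$jail/var"
  for f in "$@"; do
    if [ -e "$f" ]; then
      # --parents recreates the source path under the jail, e.g. $jail/etc/localtime
      cp -p --parents "$f" "$jail"
    else
      echo "skipping missing file: $f" >&2
    fi
  done
}

# Example (jail path as used with the -t option):
# copy_into_jail /var/named/<jail_root_name> /etc/localtime /etc/ld.so.cache
```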

Please remember: you don't have to set up a jail on Ubuntu, because AppArmor serves the same purpose in a different way, but you can build a jail using one of the two options we saw; you can use that source to apply it.

The following section is taken from the Snow B.V. book, about DANE.

After having set up DNSSEC for a certain domain, it is possible to make use of DNS Authenticated Named Entities: DANE. In order to understand the advantage of DANE, we need to look at the problem DANE is trying to solve. And this problem involves Certificate Authorities, or CA’s in short.

The problem with CA-dependent encryption solutions lies in the implementation. When visiting an SSL/TLS encrypted website via HTTPS, the browser software will gladly accept ANY certificate that uses a matching CN value AND is considered as being issued by a valid Certificate Authority. The CN value is dependent on DNS, and luckily we just set up DNSSEC to have some assurance regarding that. But, modern browsers come with over 1000 trusted root certificates. Every certificate being presented to the browser by a webserver will be validated using these root certificates. If the presented certificate can be correlated to one of the root certificates, the science matches and the browser will not complain. And that’s a problem.

This is where DANE comes in: while depending on the assurance provided by DNSSEC, DNS records are provided with certificate associating information. This mitigates the dependency regarding static root certificates for certain types of SSL/TLS connections. These records have come to be known as TLSA records. As with HTTPS, DANE should be implemented either correctly or better yet not at all. When implemented correctly, DANE will provide added integrity regarding the certificates being used for encryption. By doing so, the confidentiality aspect of the session gets a boost as well. The TLSA Resource Record syntax is described in RFC 6698 sections 2 and 7. An example of a SHA-256 hashed association of a PKIX CA Certificate taken from RFC 6698 looks as follows:

_443._tcp.www.example.com. IN TLSA (
      0 0 1 d2abde240d7cd3ee6b4b28c54df034b9
            7983a1d16e8a410e4561cb106618e971 )
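The Certificate Association Data is just the chosen matching function applied to either the whole DER-encoded certificate or its public key. Here is a minimal Python sketch, assuming you already have those DER bytes (for example from `openssl x509 -outform DER`). The bytes in the demo are placeholders, not a real certificate, and the helper names are made up for this example.

```python
import hashlib


def tlsa_association(data, matching_type):
    """Return the Certificate Association Data (hex) for matching type 0, 1 or 2."""
    if matching_type == 0:
        return data.hex()                        # exact match: data is not hashed
    if matching_type == 1:
        return hashlib.sha256(data).hexdigest()  # SHA-256
    if matching_type == 2:
        return hashlib.sha512(data).hexdigest()  # SHA-512
    raise ValueError("matching type must be 0, 1 or 2")


def tlsa_record(host, port, proto, usage, selector, matching_type, data):
    """Build a one-line TLSA RR in the _port._proto.host form from RFC 6698."""
    owner = "_%d._%s.%s." % (port, proto, host.rstrip("."))
    assoc = tlsa_association(data, matching_type)
    return "%s IN TLSA %d %d %d %s" % (owner, usage, selector, matching_type, assoc)


if __name__ == "__main__":
    fake_der = b"not a real certificate"  # placeholder for the DER bytes
    print(tlsa_record("www.example.com", 443, "tcp", 3, 0, 1, fake_der))
```

For a real record, the input must be exactly the DER bytes chosen by the Selector field; hashing the PEM text instead produces a value no validator will match.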

Each TLSA Resource Record (RR) specifies the following fields in order to create the certificate association: Certificate Usage, Selector, Matching Type and Certificate Association Data.

The Certificate Association Data Field in the example above is represented by the SHA-256 hash string value. The contents of this Data Field depend on the values of the three preceding RR fields. These three fields are represented by ’0 0 1’ in the example above. We will explain these fields in reverse order. This makes sense if you look at the hash value and then read the values from right to left as ’1 0 0’.

The value ’1’ in the third field from the example above represents the Matching Type field. This field can have a value between ’0’ and ’2’. It specifies whether the Certificate Association Data field contents are NOT hashed (value 0), hashed using SHA-256 (value 1) or hashed using SHA-512 (value 2). In the example above, the contents of the Certificate Association Data field represent a SHA-256 hash string.

The second field represented by a ’0’ represents the Selector Field. The TLSA Selectors are represented by either a ’0’ or a ’1’. A field value of ’0’ indicates that the Certificate Association Data field contents are based on a full certificate. A value of ’1’ indicates that the contents of the Certificate Association Data Field are based on the Public Key of a certificate. In the example above, the Selector field indicates that the SHA-256 hash string from the Certificate Association Data field is based on a full certificate.

The first field represented by a ’0’ in the example above represents the Certificate Usage field. This field may hold a value between ’0’ and ’3’. A value of ’0’ (PKIX-TA) specifies that the Certificate Association Data field value is related to a public Certificate Authority from the X.509 tree. A value of ’1’ (PKIX-EE) specifies that the Certificate Association Data field value is related to the certificate on the endpoint you are connecting to, using X.509 validation. A value of ’2’ (DANE-TA) specifies that the Certificate Association Data field value is related to a private CA from the X.509 tree. And a value of ’3’ (DANE-EE) specifies that the Certificate Association Data field value is related to the certificate on the endpoint you are connecting to.

In the example above, the Certificate Usage field indicates that the certificate the SHA-256 string is based on belongs to a public Certificate Authority from the X.509 tree. Those are the same Certificate Authorities that your browser uses. Field values of ’0’, ’1’ or ’2’ still depend on these CA root certificates. The true benefit of TLSA records gets unleashed when DNSSEC is properly configured and a value of ’3’ is used for the Certificate Usage field. Looking back at the very start of the TLSA Resource Record example above, the syntax clearly follows the _port._protocol.subject syntax. The TLSA Resource Record always starts with an underscore ’_’. Then the service port is defined (443 for HTTPS) and a transport protocol is specified. This can be either tcp, udp, or sctp according to RFC 6698. When generating TLSA records, it is also possible to specify dccp as a transport protocol. RFC 6698 does not explicitly mention dccp though. The subject field usually equals the servername and should match the CN value of the certificate.
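To see the field order in practice, this Python sketch splits the RFC 6698 record shown earlier into its owner-name parts and the four RDATA fields, using the meanings described above. It is a simplified parser written for this example, not a full zone-file parser.

```python
USAGE = {0: "PKIX-TA", 1: "PKIX-EE", 2: "DANE-TA", 3: "DANE-EE"}
SELECTOR = {0: "full certificate", 1: "SubjectPublicKeyInfo (public key)"}
MATCHING = {0: "exact match (not hashed)", 1: "SHA-256", 2: "SHA-512"}


def parse_tlsa(record):
    """Split a TLSA RR (parentheses allowed) into owner parts and RDATA fields."""
    tokens = record.replace("(", " ").replace(")", " ").split()
    owner = tokens[0]
    idx = tokens.index("TLSA")
    usage, selector, mtype = (int(t) for t in tokens[idx + 1:idx + 4])
    data = "".join(tokens[idx + 4:]).lower()  # the hash may be split over lines
    port_label, proto_label, subject = owner.split(".", 2)
    return {
        "port": int(port_label.lstrip("_")),
        "protocol": proto_label.lstrip("_"),
        "subject": subject.rstrip("."),
        "usage": USAGE[usage],
        "selector": SELECTOR[selector],
        "matching": MATCHING[mtype],
        "data": data,
    }
```

Reading the result right to left, as the text suggests, a 64-hex-character value (32 bytes) is exactly what a SHA-256 matching type should produce.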

It is not mandatory for the LPIC-2 exam to know all these details by heart. But, it is good to have an understanding about the different options and their impact. Just as using a value of ’3’ as the Certificate Usage can have some security advantages, a value of ’0’ as the Matching Type field can result in fragmented DNS replies when complete certificates end up in TLSA Resource Records. The success of security comes with usability.

Chapter 1

Topic 208: HTTP Services.

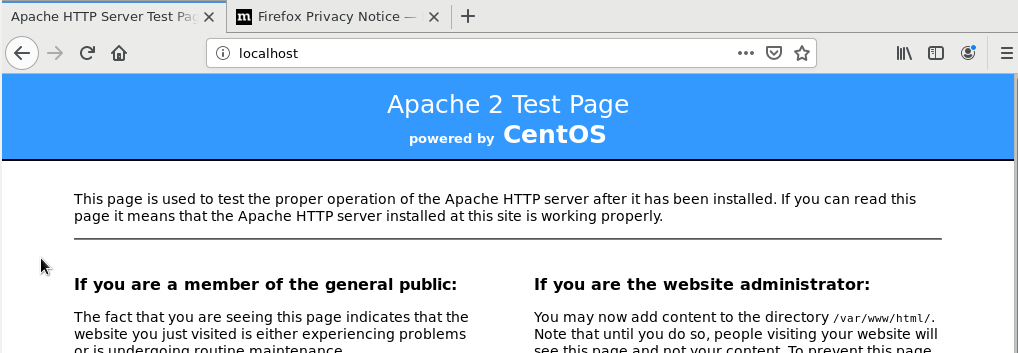

On Linux we can use the Apache server to bring a web server or web page online. First of all, it is called apache2 after its major version 2 (in my case version 2.4.18). I don’t think the name will change if an Apache version 3 is ever released, just like bind9.

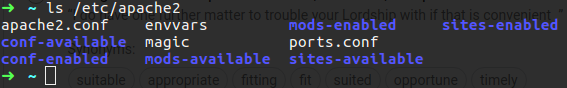

Now, there are some differences between Apache on Ubuntu and on CentOS. On Ubuntu it is called apache2, which is convenient because everything related to it is also called apache2: the service is apache2 and the configuration folder is /etc/apache2/, so it is very easy to track all the files related to Apache on Ubuntu. On CentOS it is called httpd, so the service is httpd, the configuration folder is /etc/httpd/, and so on. Remember this difference between the distributions.

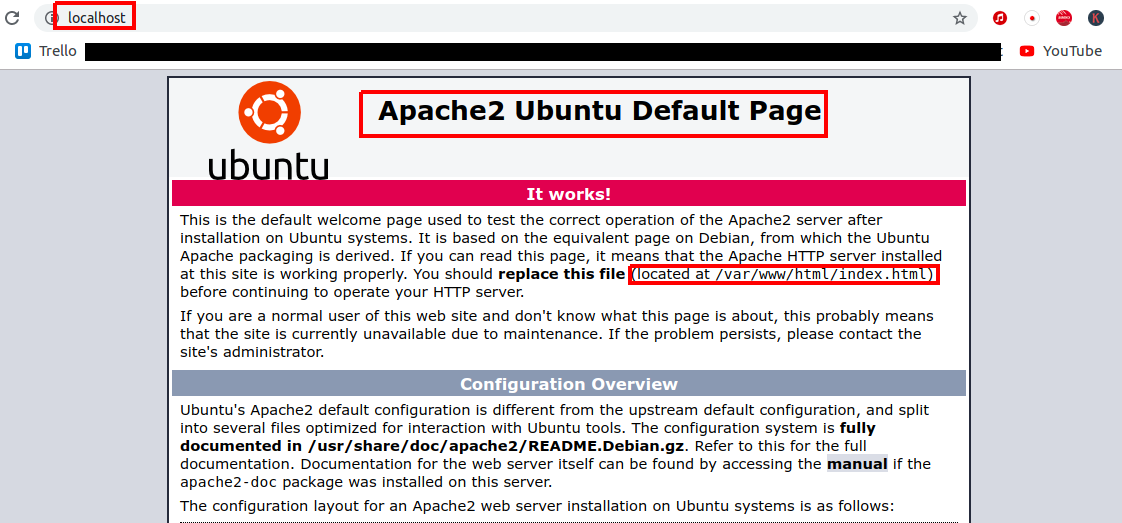

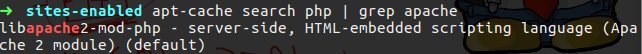

So let’s see how it’s done on Ubuntu. First you need to install it by running sudo apt install apache2. Here’s a tip: if you don’t know the exact package name, you can run apt-cache search <pkg name>, which prints every available package matching that search term, and you can pipe the output through grep to narrow it down to what you expect to see.

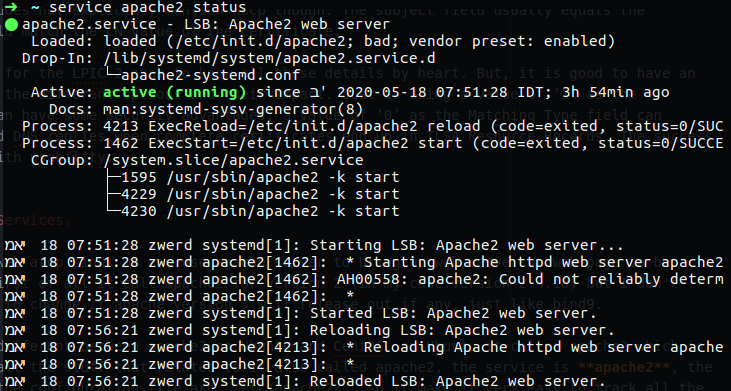

After you install apache2 you can start it with service apache2 start and check its status with service apache2 status.

Figure 34 apache2 service status.


In my case you can see that the service is active and running. You can also run apache2 -v to print the version. What you will want to look at next are the configuration files under /etc/apache2.

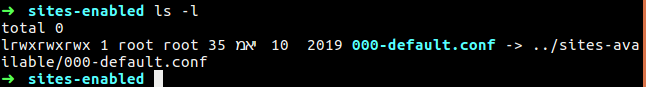

We have two folders called sites-available and sites-enabled. If you go into the sites-enabled folder you will find symbolic links pointing to conf files under sites-available. The architecture is built this way so that we can easily enable or disable configuration files without deleting them: Apache only loads what is linked in sites-enabled.

Figure 36 The symbolic link to the config file.


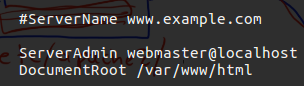
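The enable/disable mechanism boils down to symbolic links. This Python sketch mimics the core of what `a2ensite` and `a2dissite` do (the real tools also validate input and print hints). The function names are hypothetical, and it needs a POSIX system for `os.symlink`.

```python
import os
import tempfile


def enable_site(apache_dir, conf_name):
    """Like the core of a2ensite: link sites-enabled/<conf> to ../sites-available/<conf>."""
    available = os.path.join(apache_dir, "sites-available", conf_name)
    if not os.path.isfile(available):
        raise FileNotFoundError(available)
    enabled_dir = os.path.join(apache_dir, "sites-enabled")
    os.makedirs(enabled_dir, exist_ok=True)
    link = os.path.join(enabled_dir, conf_name)
    if not os.path.islink(link):
        # Relative target, like the ../sites-available/... links Ubuntu creates.
        os.symlink(os.path.join("..", "sites-available", conf_name), link)
    return link


def disable_site(apache_dir, conf_name):
    """Like a2dissite: removing the link disables the site, the conf file stays."""
    link = os.path.join(apache_dir, "sites-enabled", conf_name)
    if os.path.islink(link):
        os.remove(link)


if __name__ == "__main__":
    # Work in a throwaway directory instead of the real /etc/apache2.
    with tempfile.TemporaryDirectory() as d:
        os.makedirs(os.path.join(d, "sites-available"))
        open(os.path.join(d, "sites-available", "demo.conf"), "w").close()
        print(os.readlink(enable_site(d, "demo.conf")))
```

After creating or removing a link, Apache still needs a reload before it notices the change.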

If you look at 000-default.conf you will find a DocumentRoot line, which is the directory Apache serves web pages from. Apache sends the HTML files in that folder to the client, and the browser renders them so that we see the page content without the HTML tags.

Since the server runs on our own machine, we can just type localhost in the browser’s URL field and that will bring us the apache2 default page.

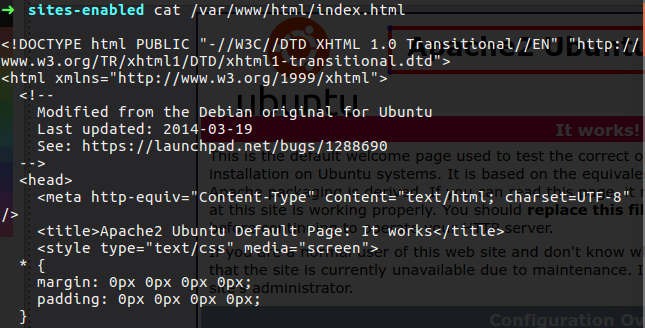

You can see that the page says the default page is found under /var/www/html/, which is what we saw earlier. If we look at the actual file before the browser renders it, you can see that it contains the HTML tags.

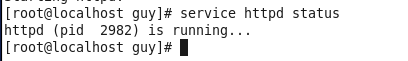
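To make the DocumentRoot idea concrete, here is a simplified Python model of how a request path maps to a file under /var/www/html. It assumes index.html is the directory index and ignores aliases, URL decoding and rewrites. The traversal check shows why a web server must never let `../` climb out of the document root.

```python
import posixpath

DOCUMENT_ROOT = "/var/www/html"  # DocumentRoot from Ubuntu's 000-default.conf
DIRECTORY_INDEX = "index.html"   # assumption: the first DirectoryIndex entry


def map_to_file(url_path, document_root=DOCUMENT_ROOT, index=DIRECTORY_INDEX):
    """Translate a request path such as /secret/ into a file path under DocumentRoot."""
    path = url_path.split("?", 1)[0]  # the query string is not part of the file name
    candidate = posixpath.normpath(posixpath.join(document_root, path.lstrip("/")))
    # Refuse "../" tricks that would climb out of DocumentRoot (directory traversal).
    if candidate != document_root and not candidate.startswith(document_root + "/"):
        raise PermissionError("path escapes DocumentRoot: " + url_path)
    if path.endswith("/"):
        candidate = posixpath.join(candidate, index)
    return candidate
```

For example, `map_to_file("/")` gives `/var/www/html/index.html`, the default page the browser showed above.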

Let’s look at Apache on CentOS. Remember that the service is called httpd, so the status command is service httpd status.

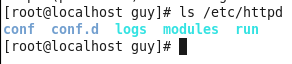

The files of the httpd service are located in /etc/httpd. This folder contains the configuration, just like /etc/apache2 on Ubuntu. Please remember that this is the same software: httpd is Apache 2 on CentOS, and the naming is the main difference.

Figure 41 The files in httpd folder.


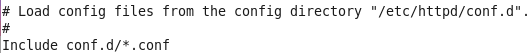

The folder that contains the additional configuration files is conf.d. It plays the role of the Ubuntu folders we saw, but instead of symbolic links, CentOS keeps the actual files directly in conf.d. You can also look at the /etc/httpd/conf/httpd.conf file, which contains the include line (IncludeOptional conf.d/*.conf) that tells Apache to load every file under conf.d whose name ends with .conf.

Figure 42 The include line for conf files.


So on CentOS, if we want to disable a conf file we can just change its extension to something else, and Apache will not load that config file.

Figure 42-1 The files in httpd folder.


So, let’s say I rename welcome.conf to welcome.conf.disable; after a restart, Apache will no longer load that welcome config file.
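The CentOS enable/disable trick works because the include line is a shell-style wildcard. Here is a small Python sketch of which conf.d names `conf.d/*.conf` would match. Apache includes wildcard matches in alphabetical order, so the result is sorted. The file list is a hypothetical example.

```python
import fnmatch

INCLUDE_PATTERN = "*.conf"  # from "IncludeOptional conf.d/*.conf" in httpd.conf


def loaded_configs(filenames, pattern=INCLUDE_PATTERN):
    """Return the conf.d entries the wildcard include would load, in alphabetical order."""
    return sorted(name for name in filenames if fnmatch.fnmatchcase(name, pattern))


if __name__ == "__main__":
    # On a real server you could pass os.listdir("/etc/httpd/conf.d") instead.
    print(loaded_configs(["autoindex.conf", "README", "welcome.conf.disable"]))
```

The renamed welcome.conf.disable no longer ends in `.conf`, so the pattern simply skips it.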

If for some reason you can’t change the main Apache configuration file, you can use a .htaccess file to make your changes. That file contains a subset of Apache directives. It is referenced in apache2.conf: for example, you can find the line AccessFileName .htaccess, which sets the name of the file to look for in each directory for additional configuration directives. Note that Apache only honors these files where AllowOverride permits it.
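.htaccess files are read per directory, and a file deeper in the tree can override one above it. This Python sketch lists the .htaccess paths consulted for a given file. It assumes AllowOverride is enabled from DocumentRoot downward; Apache actually walks from the filesystem root but skips directories where AllowOverride is None.

```python
import posixpath


def htaccess_candidates(file_path, document_root="/var/www/html", name=".htaccess"):
    """List the AccessFileName files checked for file_path, from DocumentRoot down."""
    directory = posixpath.dirname(file_path)
    rel = posixpath.relpath(directory, document_root)
    if rel.startswith(".."):
        raise ValueError("file is outside DocumentRoot")
    parts = [] if rel == "." else rel.split("/")
    current = document_root
    candidates = [posixpath.join(current, name)]
    for part in parts:
        current = posixpath.join(current, part)
        candidates.append(posixpath.join(current, name))
    return candidates
```

Every extra directory level means another file lookup on each request, which is one reason editing the main configuration is preferred when you can.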

Let’s talk about Apache modules. We can install modules for, for example, SQL, PHP, Perl, etc., and every such module gives Apache an ability it didn’t have before. So far we saw Apache serve static HTML that the user’s browser renders, but Apache does not know how to execute other languages by itself. For example, if we add some PHP code, a plain Apache install does not know how to run it, so it sends the raw code to the browser. If we install an Apache module that supports PHP, Apache will execute the PHP code and send the resulting HTML to our browser.

So, on Ubuntu just install the module you need; for PHP, search for the PHP module and install it.

Figure 43 The PHP mod that I need.


Just run the following command:


sudo apt install libapache2-mod-php

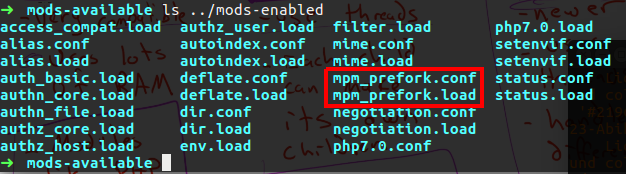

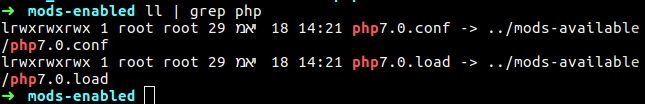

After the installation is done, you will find two new files in the mods-available folder:


php7.0.conf

php7.0.load

In mods-enabled you will see new symbolic links to those files in mods-available, which load the PHP configuration into Apache.

Figure 43 The symbolic links to PHP files.


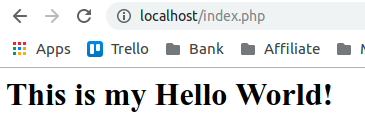

You can check the PHP version with php -v and check whether the module is active with a2query -m php7.0. Now you can create a file with a .php extension in the html folder and see whether the browser shows the rendered result.

<?php
// No shebang line is needed here: the Apache PHP module runs this file.
$text = "This is my Hello World!";
echo "<h1>$text</h1>";
?>

If it works, you will see the rendered heading in the browser.

Figure 44 PHP code on the browser.


In the Apache world there is a mechanism called Multi-Processing Modules (MPMs). The Apache server runs several processes so that multiple clients can get information from the web server at the same time; each process that serves clients is a child process of the main server. There are three main MPMs.

Prefork: this method is the most compatible one. Each child process handles a single connection and uses no threads, so it is what you need when a module is not thread-safe. For example, if you are using the mod_php module you will need prefork, because PHP then works reliably without threading issues. This method uses a lot of RAM, so make sure you size it correctly so that your server never runs out of memory and crashes.

Worker: this method uses threads, meaning every child process runs several threads and each thread serves one client connection. Because a thread is lighter than a whole process, worker needs less memory than prefork for the same number of connections.

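Event: this is the newest method. It is based on worker and also uses threads, but it handles idle keep-alive connections differently, passing them to a dedicated listener thread so that the worker threads are free to serve new requests.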

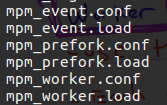

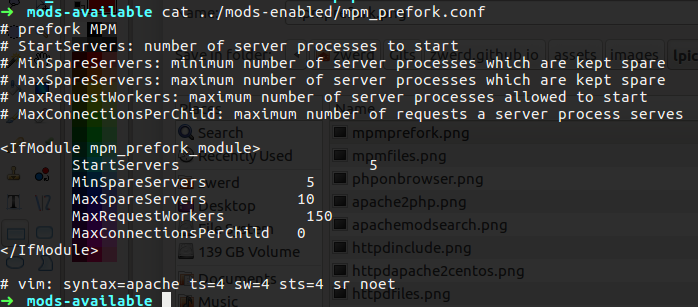

We need to know how to enable those methods. We are going to look at prefork, because I am using PHP and prefork is the MPM used in that case.

Here on Ubuntu I go to the /etc/apache2/mods-available folder and check which files are in it.

You can see the MPM files (mpm_prefork, mpm_worker and mpm_event). If we check the mods-enabled folder, we will see the symbolic links for the MPM currently in use.

So now we know that the prefork method is in use, and if we want, we can change the settings in its file (mods-available/mpm_prefork.conf).

Figure 47 The prefork method settings.


StartServers is set to 5, which means that when Apache starts, the MPM launches 5 child processes to handle clients. MaxRequestWorkers is the maximum number of connections served at once, in my case 150 (with prefork, that is also the maximum number of child processes). When the connections close, the server keeps a number of idle processes around, in my case between 5 and 10, which are the MinSpareServers and MaxSpareServers values.

Please note that MaxRequestWorkers limits the client requests handled at the same time, and the more requests you allow, the more memory you need. If you set it to 1,000,000 your server can run out of memory and crash, so set it to a realistic number of requests; if you need more, add more memory to your machine so it can handle the load.
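A quick way to size MaxRequestWorkers for prefork is to divide the RAM you can give Apache by the memory of one child process. In this Python sketch the 20 MB per child and the 1 GB reserved for the OS are assumptions. Measure your own children (for example the RSS column of `ps`) before trusting the numbers.

```python
def max_request_workers(available_mb, per_child_mb, reserve_mb=0):
    """Largest MaxRequestWorkers that fits in RAM when each prefork child uses per_child_mb."""
    usable = available_mb - reserve_mb
    if usable <= 0 or per_child_mb <= 0:
        return 0
    return int(usable // per_child_mb)


def worst_case_mb(workers, per_child_mb):
    """Memory needed if every allowed prefork child is busy at the same time."""
    return workers * per_child_mb


if __name__ == "__main__":
    # Assumed: 4 GB machine, 1 GB kept for the OS, about 20 MB per child.
    print(max_request_workers(4096, 20, reserve_mb=1024))
    print(worst_case_mb(150, 20))
```

With the same assumed 20 MB per child, `worst_case_mb(1_000_000, 20)` comes to 20,000,000 MB, which is why such a setting can only end in an out-of-memory crash.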

We can set up authentication on our web server, which forces clients to type a username and password before they can access the website.

To do that, we need to tell Apache that authentication is required, and then create the credentials.

If you remember, in the sites-enabled folder we find the 000-default.conf file. This file contains the configuration for our server; as we saw earlier, its root folder is the /var/www/html directory. We are also going to set up the authentication in that file, and all we need to do is add a new section as follows:


<Directory "/var/www/html/secret">

AuthType Basic

AuthName "Welcome! Type your credential"

AuthUserFile "/etc/apache2/userpass"

Require valid-user

</Directory>

Please note: this Directory section needs to be placed inside the VirtualHost section of your site’s conf file.

AuthType is the type of authentication we are going to use, which is Basic. There is also Digest, provided by mod_auth_digest, which implements HTTP Digest Authentication (RFC 2617) and provides an alternative to mod_auth_basic in which the password is not transmitted as cleartext.
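This is why Basic needs HTTPS: the browser only Base64-encodes `user:password` into the Authorization header, and anyone who captures the request can decode it. Here is a minimal Python sketch; the password `fsociety` is a made-up example.

```python
import base64


def basic_auth_header(user, password):
    """Build the Authorization header a browser sends for AuthType Basic."""
    token = base64.b64encode(("%s:%s" % (user, password)).encode("utf-8")).decode("ascii")
    return "Basic " + token


def decode_basic_auth(header):
    """Reverse it: Base64 is an encoding, not encryption."""
    scheme, _, token = header.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic Authorization header")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


if __name__ == "__main__":
    header = basic_auth_header("eliot", "fsociety")
    print(header)                     # what an eavesdropper sees on plain HTTP
    print(decode_basic_auth(header))  # and what they can recover from it
```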

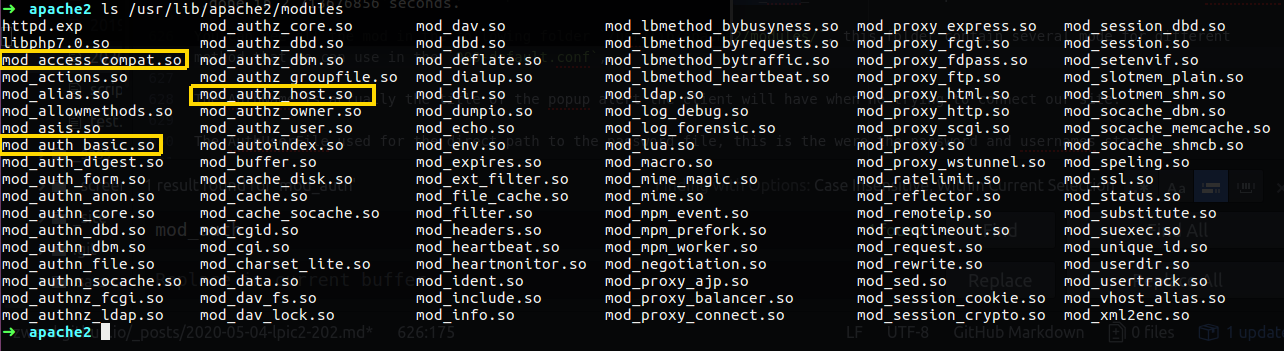

You can see all the modules in the /usr/lib/apache2/modules/ folder. It contains modules for different methods that we can use in 000-default.conf. Another example is mod_authz_host, which can allow only specific clients to connect to our server, based on IP address, hostname, etc. There is also mod_access_compat, which provides group authorizations based on hostname or IP address (the older Order/Allow/Deny style).

Figure 47-1 The modules we can use.


AuthName is the realm name shown in the login popup that the client gets when trying to access our site.

AuthUserFile gives the path to the password file, where the usernames and passwords are stored. We can use AuthGroupFile if we want Apache to look at a file containing a list of user groups for authorization.

Require valid-user says that only users who authenticate successfully against that file will be allowed into that folder.
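Besides `Require valid-user`, mod_authz_host lets you restrict access by client address, for example `Require ip 172.16.60.0/24`. This Python sketch models only the CIDR case, using the standard `ipaddress` module. Apache also accepts partial addresses and hostnames, which the sketch does not cover, and the allowed list is hypothetical.

```python
import ipaddress


def ip_allowed(client_ip, allowed):
    """Return True if client_ip falls inside any allowed address or CIDR network."""
    addr = ipaddress.ip_address(client_ip)
    # strict=False lets a plain host address like "127.0.0.1" act as a /32 network.
    return any(addr in ipaddress.ip_network(net, strict=False) for net in allowed)


if __name__ == "__main__":
    allowed = ["172.16.60.0/24", "127.0.0.1"]  # hypothetical "Require ip" values
    for client in ("172.16.60.250", "10.0.0.5"):
        print(client, ip_allowed(client, allowed))
```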

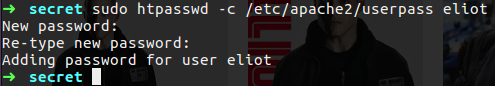

To create the password file, we run the htpasswd command as follows:

sudo htpasswd -c /etc/apache2/userpass eliot

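This asks us for the password of the user eliot, and then creates the userpass file in the /etc/apache2 folder containing that user and the password we typed (stored as a hash). The -c option creates the file, so leave it out when adding more users, or you will overwrite the existing file.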
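The stored value is a hash, not the password. On Apache 2.4, `htpasswd` uses an MD5-based `$apr1$` format by default, `-B` selects bcrypt and `-s` selects unsalted SHA-1. This Python sketch reproduces only the simple `{SHA}` format to show the idea. That format is weak and is shown for illustration only, not for real deployments.

```python
import base64
import hashlib
import hmac


def sha_htpasswd_line(user, password):
    """Build a user:{SHA}... line, the format `htpasswd -s` writes (unsalted SHA-1)."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    return "%s:{SHA}%s" % (user, base64.b64encode(digest).decode("ascii"))


def check_sha_line(line, user, password):
    """Verify a password against a {SHA} entry using a constant-time comparison."""
    name, _, stored = line.partition(":")
    expected = sha_htpasswd_line(user, password).partition(":")[2]
    return name == user and hmac.compare_digest(stored, expected)
```

Because the `{SHA}` scheme has no salt, identical passwords always give identical lines, which makes cracking a stolen file much easier than with bcrypt.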

If you try to read that file, you will find that the password is stored as a hash string. Now we use apache2ctl to restart Apache, so run the following command:


sudo apache2ctl restart

This does the same as service apache2 restart; you can also use apachectl, which can likewise control the service. Next we need to create the secret folder and add some HTML that only authorized users can see.

sudo mkdir /var/www/html/secret

cd /var/www/html/secret

sudo touch index.html

sudo vim index.html

In that HTML file I placed the following lines:


<!DOCTYPE html>

<html lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<title></title>

</head>

<body style="font-size:30px;color:blue;font-family:monospace;">

What I'm about to tell you is top secret. There's a powerful group of people out there that are secretly running the world. I'm talking about the guys, no one knows about the guys who are invisible. The top 1% of the top 1%. <b>The guys that play God without permission.</b> And now I think they're following me.

</body>

</html>

If everything is set up correctly, the browser will ask for a username and password, so let’s give it a try.

Figure 50 The Sign In message.


After you type the password you will get access to the secret folder.

We can also redirect users to another location. For example, if someone asks for the eliot folder they get the mr.robot folder. To do that, we just add the following line to the default conf file:


Redirect /eliot /mr.robot

You can see that when I type eliot, it redirects me to the mr.robot folder.

We use redirects when we move files or change our web server: our users know the old location but not the new one, so we redirect them to the new location of the files.
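Here is a simplified Python model of what `Redirect /eliot /mr.robot` does: match the URL-path on whole path segments, keep whatever follows it, and answer with the default 302 (temporary) status. It ignores query strings, explicit status arguments and RedirectMatch, so treat it as a sketch rather than mod_alias itself.

```python
def redirect_target(path, rules):
    """Return (status, new_location) for the first matching Redirect rule, else None."""
    for old, new in rules:
        # Match "/eliot" itself or anything below it, but not "/eliotx".
        if path == old or path.startswith(old + "/"):
            # The remainder of the path is appended to the target; 302 is the default status.
            return 302, new + path[len(old):]
    return None


if __name__ == "__main__":
    rules = [("/eliot", "/mr.robot")]
    print(redirect_target("/eliot/index.html", rules))
```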

Let’s say that we want our server to host several websites. Apache can do that with virtual hosts, which come in two flavors: IP-based and name-based.

With IP-based virtual hosts we assign several IP addresses to our server, and each address serves a different website, depending on which address the client connects to.

With name-based virtual hosts we configure several names for our server in Apache. If the client requests one name, it gets the page related to that name; if it requests another name, it gets another web page. If the client doesn’t request any of the configured names, it gets the default page.
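This Python sketch models how Apache 2.4 picks a virtual host, following the matching rules in its documentation. Vhosts bound to the exact IP:port of the connection are considered first, and wildcard `*` vhosts only if there is no exact match. ServerName/ServerAlias is then compared with the Host header, and the first listed vhost is the fallback. The `VHost` class and the `localhost` name for 000-default.conf are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class VHost:
    address: str          # "172.16.60.250" or "*"
    port: int
    server_name: str
    document_root: str
    aliases: list = field(default_factory=list)


def select_vhost(vhosts, local_ip, port, host_header):
    """Pick the vhost for a connection to local_ip:port carrying host_header (simplified)."""
    exact = [v for v in vhosts if v.address == local_ip and v.port == port]
    candidates = exact or [v for v in vhosts if v.address == "*" and v.port == port]
    if not candidates:
        return None
    host = (host_header or "").split(":")[0].lower()  # drop an optional :port
    for v in candidates:
        if host == v.server_name.lower() or host in (a.lower() for a in v.aliases):
            return v
    return candidates[0]  # the first listed vhost for this address is the default
```

One consequence is worth remembering for this lab: a name-based vhost on `*:80` is never reached through an address that also has an exact IP-based vhost, because exact IP:port matches are chosen before any name is compared.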

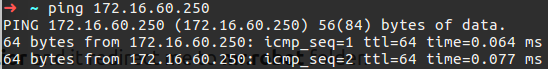

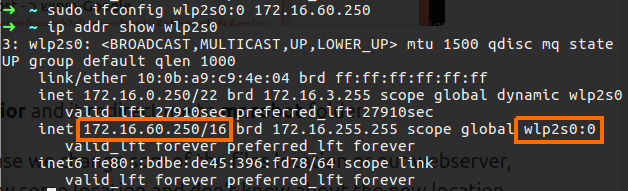

Let’s look first at how to set up the IP-based option. First we need to assign several IP addresses to our machine, and we can use ifconfig to do so:

sudo ifconfig eth0:0 172.16.60.250

This command adds the address 172.16.60.250 to our local network interface. Please note that I use eth0 here; on my machine the network interface is wlp2s0, so in my case I need to use wlp2s0:0. You can add more IP addresses by using other alias numbers (eth0:1, eth0:2, and so on).

Figure 52 My interface wlp2s0:0.


You can use the ip addr command to check that it is set correctly. Now we can try to ping it.

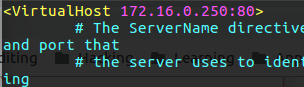

Now we need to make some changes in the default file in the sites-available folder, the same file we used earlier for authentication. At the top of this file you can see <VirtualHost *:80>. This line specifies the address for that web configuration, so in this case any client that connects to any of our server’s IP addresses on port 80 gets this section’s web page.

In that section we can specify a new root directory containing the web page for clients that connect to one of our IP addresses. For the other IP address we create a new section with its own root directory, and clients are directed to that web page only if they connect to this second IP address.

Let’s say that I want to set up two websites. The first is IP-based, which means that a client asking for that IP address gets my first web page.

The second one I will set up as name-based, so only clients that ask for that name get that website.

In /var/www/html/ I created two directories as follows:

sudo mkdir /var/www/html/webpage1

sudo mkdir /var/www/html/webpage2

In each one I created an index.html as follows:

<!-- /var/www/html/webpage1/index.html -->
<h1>Welcome to WebPage1</h1>

<!-- /var/www/html/webpage2/index.html -->
<h1>Welcome to WebPage2</h1>

Now I need to create one file for the IP-based site and one for the name-based site, so I run the following in the /etc/apache2 directory:

sudo cp sites-available/000-default.conf sites-available/ip.conf

sudo cp sites-available/000-default.conf sites-available/name.conf

Now I have the ip.conf and name.conf files. Let’s start with ip.conf: at the top of that file I put the IP address in the VirtualHost line (<VirtualHost 172.16.60.250:80>), so this section directs clients of that address to the root page in the webpage1 folder.

Inside that section I change the root directory to webpage1 with the following line:

DocumentRoot /var/www/html/webpage1

I also change the server name, so that a client asking for webpage1 by name also gets this section and its page:

ServerName webpage1

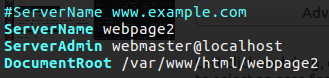

In name.conf we keep the VirtualHost line with the * sign, so it listens on any destination IP address on port 80, and we change the ServerName line to webpage2. Its DocumentRoot should also point to /var/www/html/webpage2, the same way we did in ip.conf; otherwise the name-based site would serve the default /var/www/html page.

ServerName webpage2

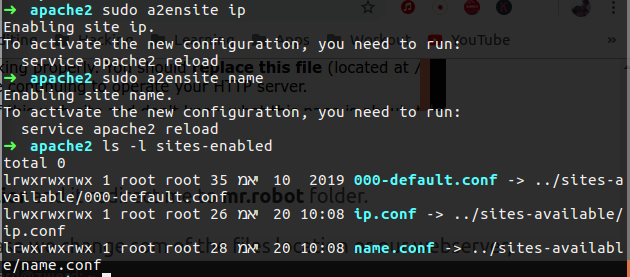

Now we need to enable our settings. For that we need more symbolic links in the sites-enabled folder, but on Ubuntu we can simply use a2ensite, which enables sites from the sites-available folder.

Figure 56 My new enable sites and symbolic link.


You can see that I have new symbolic links in the sites-enabled folder. Note that we need to reload the apache2 service for the configuration to take effect:

sudo service apache2 reload

Figure 57 The webpage1 is working.


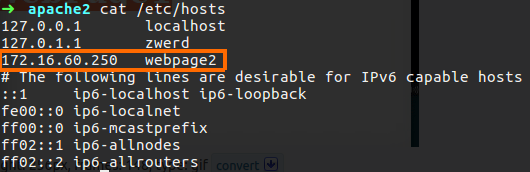

Please note that for webpage2 we need a new DNS record, or at least an entry in the hosts file for that name. Because webpage2 is name-based, the client must have a way to find out the IP address behind that name.

Figure 58 The webpage2 on the hosts file.


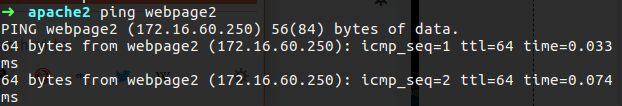
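Resolving webpage2 through the hosts file is a simple lookup. This Python sketch parses /etc/hosts-style text the way the resolver reads it: the first matching line wins and `#` starts a comment. The sample entries mirror this lab and are otherwise hypothetical.

```python
def parse_hosts(text):
    """Map each hostname in /etc/hosts-style text to its IP (the first entry wins)."""
    table = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            table.setdefault(name.lower(), ip)
    return table


if __name__ == "__main__":
    hosts = "127.0.0.1 localhost\n172.16.60.250 webpage2  # name-based vhost\n"
    print(parse_hosts(hosts).get("webpage2"))
```

Hostnames are case-insensitive, so the sketch lowercases them before storing.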

Now if I ping that name, I should get an answer from the 172.16.60.250 address.

Figure 59 The ping is working.


Now I can test whether typing webpage2 in my browser brings me the webpage2 page. Also note that the default file did not specify any IP address to listen on either, so I changed it to listen on 172.16.0.250.

Figure 60 My webpage2 is working.


You can see that I also typed http:// to get to this page. If you don’t include http:// in the URL, the browser will probably treat the single-word name as a search and send it to the default search engine.

If you have an issue with apache2, you can always check the service status, which may show the error. If you need more details, check the logs themselves in /var/log/apache2/. That folder contains access.log, which records every request made to this web server, and error.log, which records Apache’s errors; for example, a syntax typo in the configuration will show up in that log.
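On Ubuntu, access.log uses Apache’s combined log format by default. This Python sketch parses one line with a regular expression and counts a given status code. Counting 401 responses, for example, is a quick way to spot password guessing against the secret folder. The sample line in the usage is made up.

```python
import re

COMBINED = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)


def parse_access_line(line):
    """Parse one common/combined format line into a dict, or return None."""
    m = COMBINED.match(line)
    if not m:
        return None
    entry = m.groupdict()
    entry["status"] = int(entry["status"])
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry


def count_status(lines, status):
    """Count log lines with the given HTTP status, e.g. 401 for failed logins."""
    total = 0
    for line in lines:
        entry = parse_access_line(line)
        if entry and entry["status"] == status:
            total += 1
    return total
```

Usage: `count_status(open("/var/log/apache2/access.log"), 401)` would count the failed authentication attempts (reading that file usually needs root or the adm group).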

If you want to use HTTPS, which provides an encrypted connection to the server using SSL/TLS, you can do so with apache2. There are more pieces involved, such as certificates and the encrypted session, so let’s look at how it works.

The client that connects to a web server over HTTPS opens an SSL/TLS connection. First there is the TCP three-way handshake, and then the TLS handshake begins. The server sends its certificate, which contains its public key and is signed by a certificate authority that the client’s browser trusts. The browser checks that signature against its trusted root certificates and checks that the certificate matches the name it connected to. The two sides then use the key exchange to agree on symmetric session keys, and the server proves that it holds the private key matching the certificate; the traffic itself is encrypted with the session keys. All of this is meant to defeat a Man In The Middle attacker who pretends to be the server and hands the client his own certificate: because the client only trusts certificates signed by its trusted root authorities, it continues the session only with a server whose certificate chains up to one of them.

In our case we are going to set up a self-signed certificate, so you will see the client browser complain about it. We are also going to create the public and private key pair on our server.
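The browser warning comes from the client-side checks described above. Python’s `ssl.create_default_context()` enables the same two checks a browser performs: certificate-chain verification against trusted CAs and hostname matching. This sketch only inspects the context and makes no network connection.

```python
import ssl


def describe_client_checks():
    """Show what a default TLS client context verifies before any data is exchanged."""
    ctx = ssl.create_default_context()
    return {
        # CERT_REQUIRED: the server must present a chain that ends at a trusted CA.
        "verify_mode": ctx.verify_mode,
        # The certificate must match the name we connected to (the MITM defense).
        "check_hostname": ctx.check_hostname,
        # How many CA certificates are loaded right now (may be 0 if loaded lazily).
        "loaded_ca_certs": ctx.cert_store_stats()["x509_ca"],
    }


if __name__ == "__main__":
    print(describe_client_checks())
```

Wrapping a socket to our self-signed server with this context would fail with `ssl.SSLCertVerificationError` unless that certificate is loaded as trusted (for example with `ctx.load_verify_locations()`), which is the programmatic version of the browser complaint.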

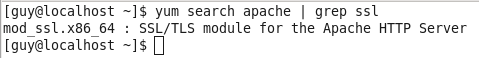

I am using CentOS, so to find the SSL module we run the following:

yum search apache | grep ssl

This command will find the module name we need to install.

Figure 61 The mod_ssl is available for install.


Then we run the following command to install the SSL module on the server:

yum install mod_ssl

Then if you check the httpd folder, you will find the ssl.conf file under the conf.d folder. It was created by the mod_ssl package and contains Apache’s SSL settings.

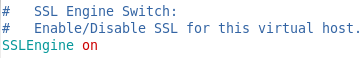

We need to check the following. First, SSLEngine needs to be on, which activates SSL for that virtual host on our server.

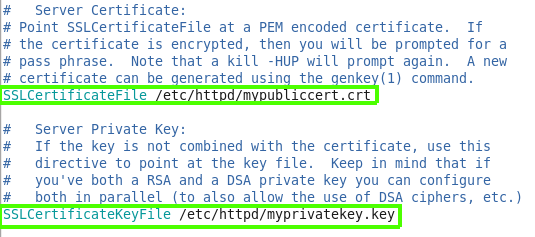

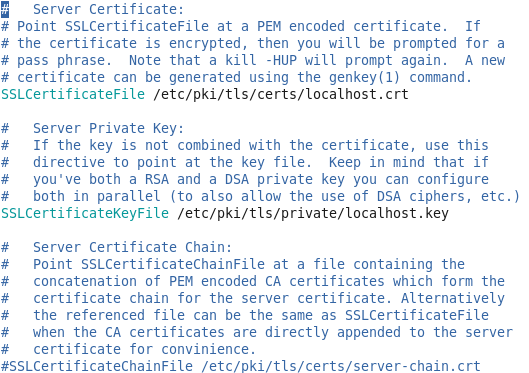

If you scroll down, you also find the certificate sections for SSL: the server certificate, the server private key and the server certificate chain.

Figure 63 The certificate sections.


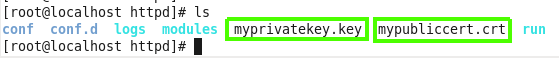

So we are going to create a .crt file, which is the certificate, and specify its location on the SSLCertificateFile line. We are also going to create a private key and specify it on the SSLCertificateKeyFile line. In SSLCertificateChainFile we can specify the intermediate CA certificate in case we really want our certificate signed by a CA. An “intermediate certificate” is a certificate issued by a trusted root CA that is in turn used to sign server certificates, so the chain goes root CA, intermediate CA, our certificate. You can read more about that on DNSimple.

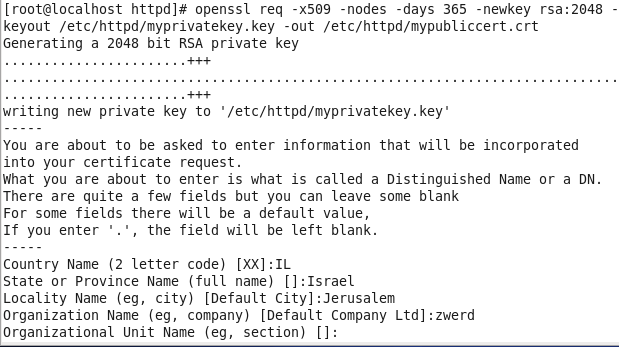

Figure 64 Using openssl for cert creation.


You can see that I run a new request for an X.509 certificate, which is why I use the openssl req subcommand. Please remember that there are several types of certificates; an X.509 certificate comes with a certain structure, and that’s what we get out of this command. The -x509 option makes req output a self-signed X.509 certificate instead of a certificate request. The option -nodes is not the English word “nodes”, but rather “no DES”: when given, OpenSSL will not encrypt the private key it writes (credit: indiv).

We also set how long the certificate will be valid, in my case 365 days (-days 365). This is a new key, which is why we use -newkey, and its type is a 2048-bit RSA key (rsa:2048). Then we specify the key file name and the certificate file name. All we need now is to answer the questions for the certificate details, and we are done.

You can see that the new files were created locally.
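The exact command appears only in the figure, so here is a Python sketch that assembles the `openssl req` options discussed above into an argument list. The file names `server.key` and `server.crt` are hypothetical, and `-subj` (which skips the interactive questions) is optional.

```python
import shlex


def self_signed_cmd(key_file, cert_file, days=365, bits=2048, subject=None):
    """Argument list for a self-signed X.509 certificate plus an unencrypted RSA key."""
    cmd = [
        "openssl", "req",
        "-x509",                     # output a self-signed certificate, not a request
        "-nodes",                    # "no DES": leave the private key unencrypted
        "-days", str(days),          # validity period
        "-newkey", "rsa:%d" % bits,  # generate a new RSA key of this size
        "-keyout", key_file,         # where the private key goes
        "-out", cert_file,           # where the certificate goes
    ]
    if subject:
        cmd += ["-subj", subject]    # answer the certificate questions up front
    return cmd


if __name__ == "__main__":
    print(shlex.join(self_signed_cmd("server.key", "server.crt")))
```

Passing the list to `subprocess.run(cmd, check=True)` would actually run it; the printed string is the equivalent shell command.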

Now we need to point SSLCertificateFile and SSLCertificateKeyFile in ssl.conf at the new files, and we are done.

All we need now is to restart the Apache service:

service httpd restart

Please remember that certificates installed as part of your Linux distribution usually live under /etc/ssl on Debian-based systems or /etc/pki on Red Hat-based systems.

Before we try to connect to the web server, we need to create the HTML page; in my case on CentOS it needs to be located at /var/www/html/index.html.

You can see that the browser complains about the connection because the certificate is not signed by a trusted root authority, but the traffic is still encrypted.

There are more SSL-related settings we can use.

SSLCACertificateFile This directive sets the all-in-one file where you can assemble the certificates of Certification Authorities (CA) whose clients you deal with. These are used for Client Authentication. Such a file is simply the concatenation of the various PEM-encoded certificate files, in order of preference.

SSLCACertificatePath Sets the directory where you keep the certificates of Certification Authorities (CAs) whose clients you deal with. These are used to verify the client certificate on Client Authentication.

SSLCipherSuite This complex directive uses a colon-separated cipher-spec string consisting of OpenSSL cipher specifications to configure the Cipher Suite the client is permitted to negotiate in the SSL handshake phase. Notice that this directive can be used both in per-server and per-directory context. In per-server context it applies to the standard SSL handshake when a connection is established. In per-directory context it forces a SSL renegotiation with the reconfigured Cipher Suite after the HTTP request was read but before the HTTP response is sent.

SSLProtocol This directive can be used to control the SSL protocol flavors mod_ssl should use when establishing its server environment. Clients then can only connect with one of the provided protocols.

ServerSignature The ServerSignature directive allows the configuration of a trailing footer line under server-generated documents (error messages, mod_proxy ftp directory listings, mod_info output, …). The reason why you would want to enable such a footer line is that in a chain of proxies, the user often has no possibility to tell which of the chained servers actually produced a returned error message.

ServerTokens This directive controls whether the Server response header field which is sent back to clients includes minimal information, everything worth mentioning or somewhere in between. By default, the ServerTokens directive is set to Full. By declaring this (global) directive and setting it to Prod, the supplied information will be reduced to the bare minimum. During the first chapter of this subject the necessity for compiling Apache from source is mentioned. Modifying the Apache Server response header field values could be a scenario that requires modification of source code. This could very well be part of a server hardening process. As a result, the Apache server could provide different values as response header fields.
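Banner grabbing is usually the first step of web reconnaissance, which is why ServerTokens matters. This Python sketch reproduces the shape of the Server header for each level, following the examples in the Apache documentation. The version, OS label and module token are hypothetical values.

```python
def server_header(tokens, version="2.4.18", os_name="Unix", modules=("PHP/7.0",)):
    """Approximate the Server response header for a given ServerTokens level."""
    major, minor = version.split(".")[:2]
    full_base = "Apache/%s (%s)" % (version, os_name)
    levels = {
        "Prod": "Apache",                          # the bare minimum
        "Major": "Apache/" + major,
        "Minor": "Apache/%s.%s" % (major, minor),
        "Min": "Apache/" + version,
        "OS": full_base,
        "Full": " ".join([full_base] + list(modules)),  # the default level
    }
    return levels[tokens]


if __name__ == "__main__":
    for level in ("Full", "Prod"):
        print(level, "->", server_header(level))
```

With `ServerTokens Prod`, a scanner learns only that the server is Apache, not which version to look up exploits for.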

TraceEnable This directive overrides the behavior of TRACE for both the core server and mod_proxy. The default TraceEnable on permits TRACE requests per RFC 2616, which disallows any request body to accompany the request. TraceEnable off causes the core server and mod_proxy to return a 405 (method not allowed) error to the client. There is also the non-compliant setting extended which will allow message bodies to accompany the trace requests. This setting should only be used for debugging purposes. Despite what a security scan may say, the TRACE method is part of the HTTP/1.1 RFC 2616 specification and should therefore not be disabled without a specific reason.

For LPIC-2 202 we also need to know how a proxy works. A proxy server is a middle server between us and the Internet: when we use one, every web connection goes through it. There are several reasons to use a proxy. The first is caching: if someone in the organization browses zwerd.com, the proxy saves those pages in its memory and on its disk, and when another person requests the same content they get it faster because it is already cached on the proxy. The second reason is security: a site can deliver malicious JavaScript or files that can harm your system, and a proxy with smart, application-level inspection can check that content before it reaches our clients and block any malicious file or code. For LPIC-2 202 we only need to know the Squid proxy server, which covers the first reason, but remember that there are other proxy solutions out there that focus on the security side.
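The caching benefit is easy to model. This toy Python cache keeps pages up to a byte budget (like cache_mem), refuses objects above a size limit (like maximum_object_size_in_memory) and evicts the least recently used page, the idea behind Squid’s default `lru` replacement policy. Real Squid also honors HTTP freshness rules and uses a disk tier, which this sketch ignores. The class name and sizes are made up.

```python
from collections import OrderedDict


class TinyPageCache:
    """A toy proxy cache: up to max_bytes of pages, least recently used evicted first."""

    def __init__(self, max_bytes, max_object_bytes):
        self.max_bytes = max_bytes
        self.max_object_bytes = max_object_bytes
        self.pages = OrderedDict()  # url -> body, oldest first
        self.used = 0

    def get(self, url, fetch):
        """Return (body, hit); fetch(url) is only called on a cache miss."""
        if url in self.pages:
            self.pages.move_to_end(url)  # mark as most recently used
            return self.pages[url], True
        body = fetch(url)
        if len(body) <= self.max_object_bytes:  # too-large objects are not cached
            self.pages[url] = body
            self.used += len(body)
            while self.used > self.max_bytes:
                _, old = self.pages.popitem(last=False)  # evict the LRU page
                self.used -= len(old)
        return body, False
```

The second person asking for the same URL gets a hit and never touches the Internet, which is exactly the speed-up described above.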

I am using Ubuntu, so to install Squid I just need to run the following:


sudo apt install squid

On older Ubuntu releases the package was called squid3, and newer releases keep squid3 only as a transitional package that pulls in squid, so either name gets you Squid. For the exam the exact version doesn't really matter.

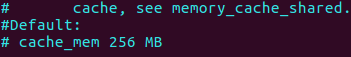

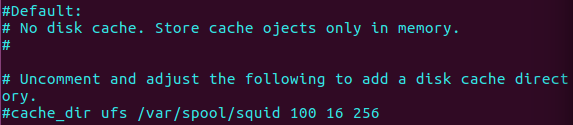

There are two cache settings to know in Squid. The first is cache_mem, which sets how much RAM Squid uses to cache the objects it fetches (much like the cache on your PC). The second is cache_dir, which sets where the on-disk cache lives and how much disk space it may use.

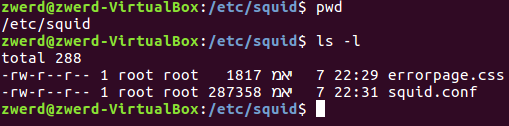

We can find these settings in Squid's configuration file.

errorpage.css is the stylesheet for the error pages the local proxy returns, and squid.conf contains the settings we need to look at. This file contains many settings with explanations, so in vim you can type /cache_mem to jump to that setting quickly.

You can see that by default Squid uses 256 MB of RAM for cached pages; the line is commented out, so if we uncomment it without changing the value, the cache keeps the same 256 MB default.

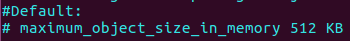

You also have the following line:

Figure 69 The max size for objects in squid settings.

This line (maximum_object_size_in_memory) sets the largest single object Squid keeps in the memory cache, which in my case is 512 KB per object.
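A back-of-the-envelope check of how the two memory settings interact, written in Python with the values from my squid.conf. It ignores Squid's per-object metadata overhead, so the count is only an upper bound, and `kept_in_memory` is a hypothetical helper approximating the size rule.

```python
KB = 1024
MB = 1024 * KB

CACHE_MEM = 256 * MB          # cache_mem 256 MB
MAX_OBJ_IN_MEMORY = 512 * KB  # maximum_object_size_in_memory 512 KB


def kept_in_memory(object_size: int) -> bool:
    """True if an object is small enough for the memory cache (approximation)."""
    return object_size <= MAX_OBJ_IN_MEMORY


# Upper bound on how many maximum-size objects fit in cache_mem.
print(CACHE_MEM // MAX_OBJ_IN_MEMORY)                     # 512
print(kept_in_memory(300 * KB), kept_in_memory(2 * MB))  # True False
```

Larger objects are not lost: they can still be stored in the disk cache configured with cache_dir.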

For the on-disk cache, search for cache_dir, which sets how much disk space the cache may use. Remember that there are several store types (the on-disk cache formats): the best known is ufs, and there are others such as diskd or rock, but for LPIC2 we need to know about ufs and that it is the default store type.

Figure 70 The max size for disk cache in squid settings.

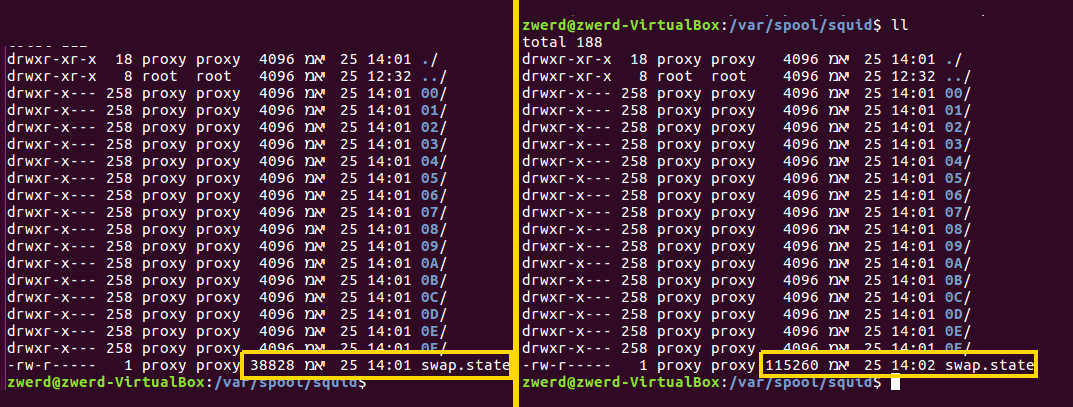

Note that by default no disk cache is configured and Squid caches only in memory, so we need to uncomment that line. The line uses the ufs store type, which we keep because it works. Next comes the path to the store location, /var/spool/squid3. The first number is the maximum disk space in MB (100 MB in my case), the second is the number of first-level directories (16), and the last is the number of sub-directories created inside each of them (256).
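To make the three numbers concrete, here is a minimal Python parser for a ufs-style cache_dir line. `CacheDir` and `parse_cache_dir` are hypothetical names for this example; it assumes the `cache_dir TYPE PATH MB L1 L2` layout used by ufs (rock, for instance, has no L1/L2 fields) and ignores any options after L2.

```python
from dataclasses import dataclass


@dataclass
class CacheDir:
    store_type: str  # e.g. ufs (the default), diskd, rock
    path: str        # where the cache lives on disk
    size_mb: int     # maximum disk space in MB
    l1: int          # number of first-level directories
    l2: int          # sub-directories inside each first-level directory

    @property
    def total_dirs(self) -> int:
        return self.l1 * self.l2


def parse_cache_dir(line: str) -> CacheDir:
    """Parse 'cache_dir TYPE PATH MB L1 L2'; trailing options are ignored."""
    parts = line.split()
    if len(parts) < 6 or parts[0] != "cache_dir":
        raise ValueError(f"not a ufs-style cache_dir line: {line!r}")
    size_mb, l1, l2 = (int(x) for x in parts[3:6])
    return CacheDir(parts[1], parts[2], size_mb, l1, l2)


cd = parse_cache_dir("cache_dir ufs /var/spool/squid3 100 16 256")
print(cd.size_mb, cd.l1, cd.l2, cd.total_dirs)  # 100 16 256 4096
```

So this single line makes Squid spread its cached objects over 16 × 256 = 4096 directories, which keeps each directory small.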

After you finish your changes, restart the Squid service (on older releases the service is named squid3, on newer ones it is simply squid):


sudo service squid3 restart

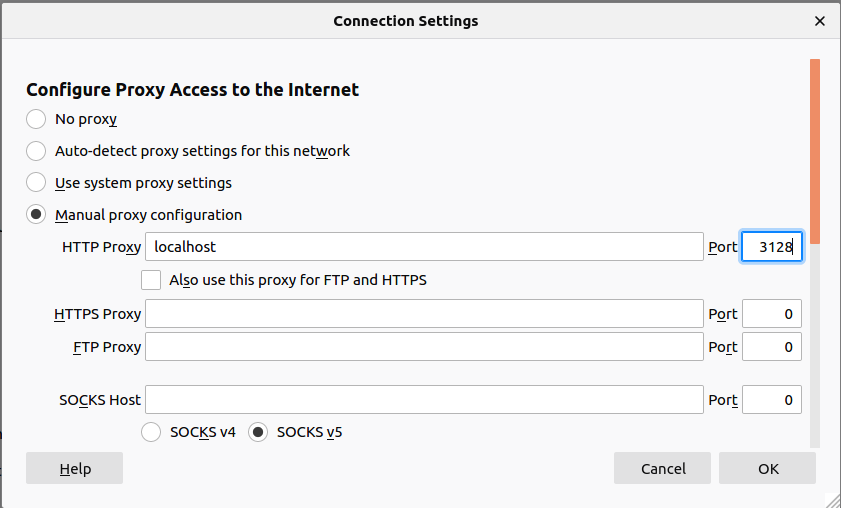

Now we need to test it, so we open the browser and set it to use the local proxy. From now on, every connection the browser makes to the Internet goes through our proxy server.

Figure 71 The proxy settings on the browser.

So I set localhost as the proxy server with port 3128, which is Squid's default port.
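The same test can be scripted instead of clicking through browser settings. This is a minimal Python sketch using `urllib.request.ProxyHandler`; `proxy_opener` is a hypothetical helper, and it assumes Squid is listening on localhost:3128 as configured above.

```python
import urllib.request


def proxy_opener(proxy: str = "http://localhost:3128") -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS requests through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

Usage, with Squid running: `proxy_opener().open("http://zwerd.com/")`. Squid usually adds an `X-Cache` response header, so fetching the same page twice should show a MISS followed by a HIT.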

Then I browsed to zwerd.com and compared the size of the Squid store files before and after; you can see the difference.

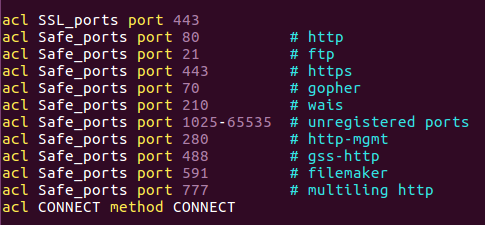

You can also see that there are 16 directories, and if we look inside one of them we find 256 sub-directories. So far we have seen caching in Squid, but Squid can do more, such as ACLs that allow or deny access for someone. Squid ACLs work much like the ACLs in the networking world.

Squid ACLs are divided into two parts: ACL elements and access lists.

ACL elements define what to match: source or destination IP, domain, time of day, port, and so on. Access lists define what to do with the matching traffic, for example http_access to allow or deny the connection, always_direct to send the request straight to the origin site instead of through a parent cache, or log_access to control whether a particular connection is logged.

All of these settings are in squid.conf; search for TAG: acl to find that section quickly.

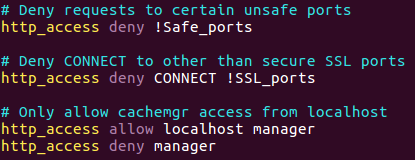

There are several examples built into Squid by default, so let's look at them.

Figure 73 The ACLs that come built in.

You can see SSL_ports, which is the name of that ACL; it matches every connection to port 443. Note that the ACL itself doesn't perform any action: we only specify what we want to match, and afterwards we set the action for each ACL name.

You can also see the Safe_ports ACL, which lists one port per line (80, 21, 443, 70, ...), so an action applied to that ACL applies to every connection that uses one of the listed ports.

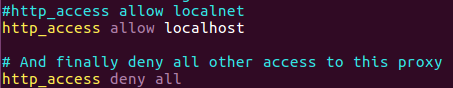

Now, if you scroll down in the conf file you will find the action section for your ACLs.

You can see a deny rule for Safe_ports, but note the exclamation mark ! before the ACL name: it negates the ACL, so every connection to a port that is not listed in Safe_ports is denied. Below it you can see a deny rule for CONNECT !SSL_ports, which means every CONNECT request that does not go to an SSL_ports port is denied.

Further down you will find the following line:

Figure 75 The last ACL.

http_access deny all

This line means that all other traffic not matched by an earlier rule is denied. Squid checks the http_access rules from top to bottom and the first match wins, so any new rule must be placed before this line.

You can also see an allow rule for localhost, which matches every connection from the local host, so for my changes to take effect I need to place them before that line.
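The top-to-bottom, first-match behavior is easier to see in a small model. This Python sketch is a simplified re-creation of the default rules (Safe_ports is abbreviated, and requests are plain dicts), not Squid's code; `decide` and the rule table are hypothetical names. It also models Squid's documented fallback: if no rule matches, the opposite of the last rule's action applies.

```python
from typing import Callable, Dict, List, Tuple

Request = Dict[str, object]

# ACL elements (simplified): each name is a predicate on the request.
# Safe_ports is an abbreviated version of the default list.
SAFE_PORTS = {80, 21, 443, 70} | set(range(1025, 65536))
ACLS: Dict[str, Callable[[Request], bool]] = {
    "Safe_ports": lambda r: r["port"] in SAFE_PORTS,
    "SSL_ports": lambda r: r["port"] == 443,
    "CONNECT": lambda r: r["method"] == "CONNECT",
    "localhost": lambda r: r["src"] == "127.0.0.1",
    "all": lambda r: True,
}

# Access list: (action, ACL names). All names on one line must match (AND);
# a leading "!" negates the ACL.
HTTP_ACCESS: List[Tuple[str, List[str]]] = [
    ("deny", ["!Safe_ports"]),
    ("deny", ["CONNECT", "!SSL_ports"]),
    ("allow", ["localhost"]),
    ("deny", ["all"]),
]


def acl_matches(name: str, req: Request) -> bool:
    if name.startswith("!"):
        return not ACLS[name[1:]](req)
    return ACLS[name](req)


def decide(req: Request, rules: List[Tuple[str, List[str]]] = HTTP_ACCESS) -> str:
    """Return the action of the first rule whose ACLs all match."""
    for action, names in rules:
        if all(acl_matches(n, req) for n in names):
            return action
    # No rule matched: Squid applies the opposite of the last rule's action.
    return "allow" if rules[-1][0] == "deny" else "deny"


print(decide({"src": "127.0.0.1", "port": 80, "method": "GET"}))        # allow
print(decide({"src": "10.0.0.5", "port": 80, "method": "GET"}))         # deny
print(decide({"src": "127.0.0.1", "port": 25, "method": "GET"}))        # deny
print(decide({"src": "127.0.0.1", "port": 8443, "method": "CONNECT"}))  # deny
```

Moving a new allow rule below `("deny", ["all"])` in this table would make it unreachable, which is exactly why new rules go above the deny all line.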

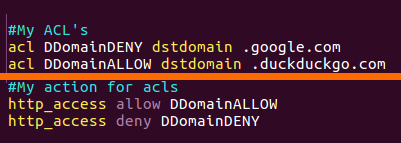

So with my access list, every client that tries to reach google is denied, but a request to duckduckgo.com works.

Note that I used port 80 over HTTP (not HTTPS, which uses SSL on port 443) because there is a rule that allows that port.
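A domain block like this is usually written with a dstdomain ACL, for example `acl blocked dstdomain .google.com` followed by `http_access deny blocked` (the ACL name blocked is hypothetical; my actual rule is in the screenshot). The leading dot matters, and this small Python function approximates the matching rule: `.google.com` matches the domain and all its subdomains, while `google.com` without the dot matches only that exact name.

```python
def dstdomain_match(pattern: str, host: str) -> bool:
    """Approximate Squid dstdomain matching for a single pattern."""
    pattern = pattern.lower()
    host = host.lower().rstrip(".")
    if pattern.startswith("."):
        # ".google.com" covers google.com itself and any *.google.com
        return host == pattern[1:] or host.endswith(pattern)
    return host == pattern  # no leading dot: exact host name only


print(dstdomain_match(".google.com", "www.google.com"))  # True
print(dstdomain_match(".google.com", "google.com"))      # True
print(dstdomain_match("google.com", "www.google.com"))   # False
print(dstdomain_match(".google.com", "duckduckgo.com"))  # False
```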

There is also a way to build ACLs based on authentication: the user has to type a username and password, and if they match the list, the client is allowed to connect to the site.

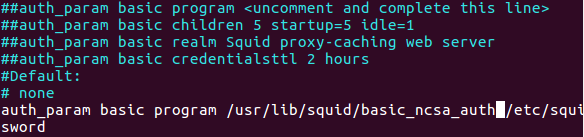

To do so we need to set auth_param, which is also in the squid.conf file. Searching for that section reveals that no authentication is configured by default, so we need to set it up. The line we are going to add is as follows:


auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/password

auth_param is the directive that configures authentication. basic is the authentication scheme: the browser pops up a dialog asking the user for a username and password, which are then checked against the list. program specifies the helper program used for authentication; note that ncsa uses the same kind of password file we use to password-protect folders in Apache. There are other helpers, but this one is very simple, so I use it for the example here. The last path is the file that contains the passwords; Squid needs to know where it is stored to compare what clients type against it.

Figure 78 My auth_param line in squid.conf file.

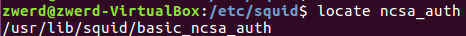
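It is worth knowing what the basic scheme actually sends. This Python sketch builds the `Proxy-Authorization` header a browser sends for Basic authentication and decodes it again; the helper names and the credentials are made up for the example. Because the value is only base64, anyone who can sniff the traffic between the client and Squid can recover the password.

```python
import base64
from typing import Tuple


def basic_proxy_auth(user: str, password: str) -> str:
    """Value of the Proxy-Authorization header for HTTP Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def decode_basic(value: str) -> Tuple[str, str]:
    """Recover user and password: Basic is an encoding, not encryption."""
    scheme, _, token = value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic credential")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


header = basic_proxy_auth("zwerd", "S3cret!")  # example credentials only
print("Proxy-Authorization:", header)
print(decode_basic(header))  # ('zwerd', 'S3cret!')
```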

Note that the ncsa helper is stored in a different location on other distros, so you can search for it with find and put its actual location in squid.conf. You can also use locate if its database is up to date (if not, run updatedb first).

Figure 79 The ncsa file location.

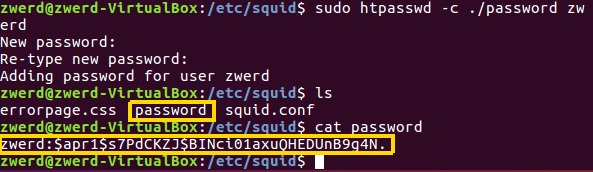

It is located in /usr/lib/squid/, and you can see that this folder contains more authentication helpers we can use if we want. We also need to create the password file we specified in squid.conf.


sudo htpasswd -c /etc/squid/password zwerd

This command asks us for a password and writes the user and the hashed password to the file named in the command (-c creates the file, overwriting any existing one, so use it only for the first user). Note that htpasswd is part of apache2-utils.

Figure 80 Creating the password file using htpasswd.

You can see that it asks you to retype the password for zwerd, and that the file contains the hash of that password.
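Each line of the password file is simply `user:hash`, and the hash prefix tells you which algorithm htpasswd used. The Python sketch below parses such a file and identifies the scheme; as a self-contained demo it also creates and checks a `{SHA}` value, the format `htpasswd -s` writes. All function names are hypothetical, and whether a given Squid helper accepts a particular scheme is not something this sketch checks.

```python
import base64
import hashlib
import hmac
from typing import Dict


def parse_htpasswd(text: str) -> Dict[str, str]:
    """Map user -> stored hash from 'user:hash' lines (blank/# lines skipped)."""
    users: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        user, _, hashed = line.partition(":")
        users[user] = hashed
    return users


def hash_scheme(hashed: str) -> str:
    """Guess the hash format from its prefix, as htpasswd writes them."""
    if hashed.startswith("$apr1$"):
        return "apr1-md5 (htpasswd default)"
    if hashed.startswith(("$2y$", "$2a$", "$2b$")):
        return "bcrypt (htpasswd -B)"
    if hashed.startswith("{SHA}"):
        return "sha1 (htpasswd -s)"
    return "crypt or plaintext"


def sha_entry(password: str) -> str:
    """Build an htpasswd -s style value: unsalted SHA-1, weak, demo only."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    return "{SHA}" + base64.b64encode(digest).decode("ascii")


def check_sha(password: str, hashed: str) -> bool:
    return hmac.compare_digest(sha_entry(password), hashed)
```

For example, `hash_scheme(parse_htpasswd(open("/etc/squid/password").read())["zwerd"])` shows which format your file uses; with a default htpasswd it should report apr1-md5.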

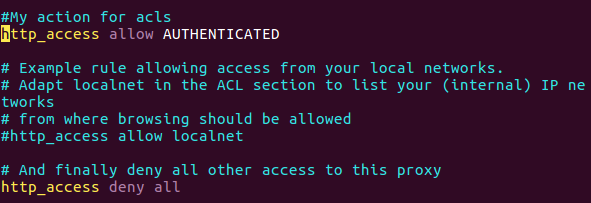

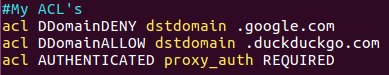

Now the last thing we need is to add the ACL used as a reference for authentication, so let's open the squid.conf file again and add new lines to the ACL section.


acl AUTHENTICATED proxy_auth REQUIRED

http_access allow AUTHENTICATED

Figure 81 Proxy authentication settings in acl.

You can see that I removed the rule I set up before as well as the allow line, so all that is left is the deny all and my authentication allow line.
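The effect of these two lines can be modeled in a few lines of Python. This is a toy sketch, not Squid's logic: `check_proxy_request` is hypothetical, and `users` holds plaintext passwords only to keep the demo short (Squid compares against the hashed file through the ncsa helper). A request without valid credentials gets 407 Proxy Authentication Required, which is what makes the browser show the login popup.

```python
import base64
from typing import Dict, Optional


def check_proxy_request(headers: Dict[str, str], users: Dict[str, str]) -> int:
    """Return the status a toy proxy would answer with, modeling:
        acl AUTHENTICATED proxy_auth REQUIRED
        http_access allow AUTHENTICATED
        http_access deny all
    """
    value: Optional[str] = headers.get("Proxy-Authorization")
    if value is None or not value.startswith("Basic "):
        return 407  # no credentials yet: ask the browser (the popup)
    try:
        raw = base64.b64decode(value[len("Basic "):]).decode("utf-8")
    except ValueError:  # bad base64 or bad UTF-8
        return 407
    user, _, password = raw.partition(":")
    if users.get(user) == password:
        return 200  # http_access allow AUTHENTICATED
    return 407      # wrong credentials: challenge again
```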

Now it's time to check that it works: I expect authentication to be required. Don't forget to restart the Squid service first.

As soon as I opened my browser I got the popup dialog asking for a username and password, which means our mission is accomplished.

For the LPIC2 202 exam we also need to be familiar with Nginx. So far we have seen a proxy server and how to use one with Squid; nginx is used here in a similar way, except that it acts as a reverse proxy.

Note the difference: a normal (forward) proxy controls the traffic of clients from the LAN to the WAN (the Internet), so we can apply ACLs to allow or deny any traffic that goes through that server. With a reverse proxy the picture is the opposite of what we have seen so far: the clients are on the WAN, and we have servers on our network that we publish on the web, so we need to give WAN clients a way to connect to them.

You may think that's easy: we just need a public IP address for the web server and that's it.

But say we have more than one server to bring online. In that case we can use NAT (or NAT/PAT) on our external router to direct clients from the WAN to our servers.

A reverse proxy works more or less the same way, but it can also route connections based on the requested name. For example, if a client wants the hack.me site and types https://hack.me in the browser, we can serve it the zwerd.com site instead, and the client will never notice: it thinks it is connected to hack.me when it is actually getting zwerd.com.
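Name-based routing boils down to a lookup on the Host header the client sends. This Python sketch models that decision with a hypothetical routing table; hack.me → zwerd.com is the example from this post, while intranet.example and 192.168.1.20 are made-up placeholders for a second internal server.

```python
from typing import Dict, Optional

# Hypothetical routing table: public name -> upstream server.
ROUTES: Dict[str, str] = {
    "hack.me": "https://zwerd.com",
    "intranet.example": "http://192.168.1.20",
}


def pick_upstream(host_header: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Choose a backend from the Host header, like name-based virtual hosts.

    Simplified: strips an optional :port and ignores IPv6 literals.
    """
    name = host_header.split(":", 1)[0].lower()
    return routes.get(name)


print(pick_upstream("hack.me"))      # https://zwerd.com
print(pick_upstream("HACK.ME:80"))   # https://zwerd.com
print(pick_upstream("example.org"))  # None
```

In nginx this table corresponds to separate server blocks with different server_name values, each with its own proxy_pass.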

So let's see how to set up and configure nginx. Its configuration looks like Apache2's, but the syntax differs in places.


sudo apt install nginx

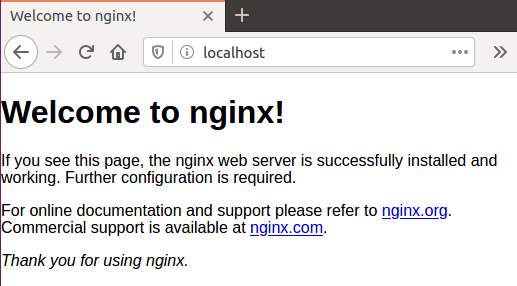

We just run that command and it also configures everything we need, so at the end we can open a browser and see the nginx default page on that machine.

Figure 84 The nginx default page.

The nginx default page is located in /share/nginx/html/; note that this is different from Apache2's /var/www/html/.

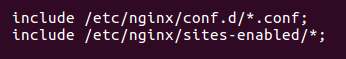

If you check the /etc/nginx/ folder, you will find several things that look the same as in Apache2: sites-available and sites-enabled, which hold the configuration for sites, and the nginx.conf file, which contains the main configuration.

In nginx.conf you can see that it includes all configuration files in the conf.d folder and in sites-enabled.

Figure 85 The nginx default page.

In the sites-enabled folder there is a default file that contains the default configuration, and we can learn the correct nginx syntax from it.

So nginx looks like Apache2. It is generally faster, especially when serving static content to many concurrent connections, but Apache2 has abilities that nginx lacks, such as per-directory .htaccess files.

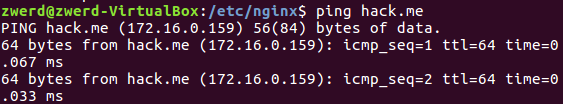

Let's see how it works. We are going to set up a configuration that points anyone who tries to reach https://hack.me to https://zwerd.github.io, which means the client gets zwerd.com instead of hack.me.

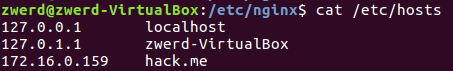

First, let's map hack.me to our local server in the hosts file (/etc/hosts).

Figure 86 You can see that the local ip address is set for hack.me.

So any request for hack.me made from this machine now goes to our local server.
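The hosts file format is simple: an IP address followed by one or more names, with # starting a comment. This Python sketch parses that format to show how hack.me ends up pointing at our server; `parse_hosts` is a hypothetical helper and the sample uses 127.0.0.1 as an assumed local address. It only affects machines that use this hosts file, which is why the trick works in a lab but not for other clients.

```python
from typing import Dict


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each host name in /etc/hosts-style text to its IP address.

    If a name appears more than once, the first entry is kept.
    """
    table: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            table.setdefault(name.lower(), ip)
    return table


sample = """
127.0.0.1   localhost
127.0.0.1   hack.me   # send hack.me to the local nginx
"""
print(parse_hosts(sample)["hack.me"])  # 127.0.0.1
```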

Now we need to create a new configuration file for hack.me, and in it we include proxy_params so the setup works well. We create the configuration in sites-available and enable it by creating a symbolic link in sites-enabled. The configuration file needs to contain the following:

    server {
        listen 80;
        server_name hack.me;

        location / {
            proxy_pass https://zwerd.com;
            include /etc/nginx/proxy_params;
        }
    }

The server listens on port 80. For any request to hack.me, the location block for / (the root of hack.me) passes the request to the zwerd.com site. We also include proxy_params. Don't forget the closing curly braces at the end of each block.
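To see what proxy_params actually changes, here is a Python model of the headers it sets. On Debian and Ubuntu the file typically contains the four proxy_set_header lines quoted in the docstring, but check your own /etc/nginx/proxy_params. `proxied_headers` is a hypothetical helper and 192.168.1.50 is a made-up client address.

```python
from typing import Dict


def proxied_headers(incoming: Dict[str, str], client_ip: str,
                    scheme: str = "http") -> Dict[str, str]:
    """Model the request headers nginx sends upstream with proxy_params:
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    """
    out = dict(incoming)
    out["Host"] = incoming.get("Host", "")  # original name, e.g. hack.me
    out["X-Real-IP"] = client_ip
    prior = incoming.get("X-Forwarded-For")
    # $proxy_add_x_forwarded_for appends the client to any existing list
    out["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip
    out["X-Forwarded-Proto"] = scheme
    return out


h = proxied_headers({"Host": "hack.me"}, "192.168.1.50")
print(h["Host"], h["X-Forwarded-For"])  # hack.me 192.168.1.50
```

Note that the upstream receives `Host: hack.me`, not its own name.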

Now we need to create a symbolic link in sites-enabled that points to the file in sites-available.


ln -s ../sites-available/hack.me

Note that I ran that command inside the sites-enabled folder. Now all we need to do is restart the service (for example with sudo systemctl restart nginx, after checking the syntax with sudo nginx -t) and check that connecting to hack.me actually gives us zwerd.com.
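For reference, the same relative link can be created from Python with `os.symlink`. This sketch assumes a POSIX system; `enable_site` is a hypothetical helper, and the demo runs in a temporary directory instead of the real /etc/nginx, so it is safe to try.

```python
import os
import tempfile


def enable_site(nginx_root: str, name: str) -> str:
    """Equivalent of running `ln -s ../sites-available/NAME` inside
    NGINX_ROOT/sites-enabled. Returns the path of the new link."""
    if not os.path.exists(os.path.join(nginx_root, "sites-available", name)):
        raise FileNotFoundError(f"no such site config: {name}")
    target = os.path.join("..", "sites-available", name)  # relative target
    link = os.path.join(nginx_root, "sites-enabled", name)
    os.symlink(target, link)
    return link


# Demo in a throwaway directory instead of the real /etc/nginx.
with tempfile.TemporaryDirectory() as root:
    for sub in ("sites-available", "sites-enabled"):
        os.mkdir(os.path.join(root, sub))
    open(os.path.join(root, "sites-available", "hack.me"), "w").close()
    link = enable_site(root, "hack.me")
    print(os.readlink(link), os.path.isfile(link))  # ../sites-available/hack.me True
```

A relative target keeps the link valid even if the whole nginx directory is copied elsewhere.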

Figure 88 Web connection to hack.me which is zwerd.com.

Note that if you try this in your lab, you can't proxy directly to my real zwerd.com site, or to YouTube or Yahoo: they reject such requests because of the proxy_params (which, among other things, forward the original Host header, hack.me). So you need to check the params for it to work right; in my example I set up the zwerd.com site locally. Also note that the browser reaches this nginx reverse proxy directly on port 80 without any proxy settings, unlike Squid, which the browser must be configured to use on port 3128.

Chapter 2

Topic 209: File Sharing.

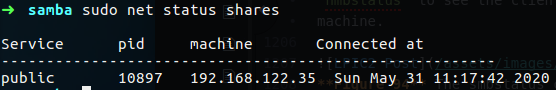

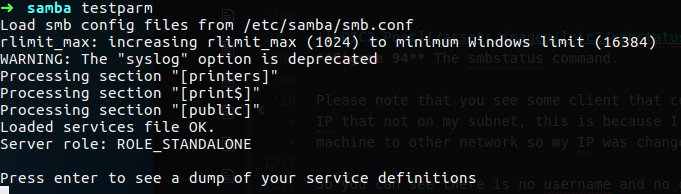

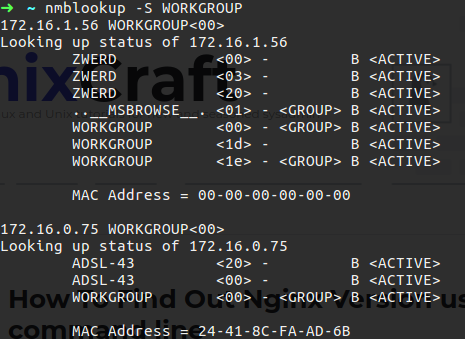

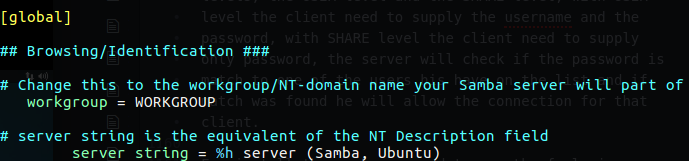

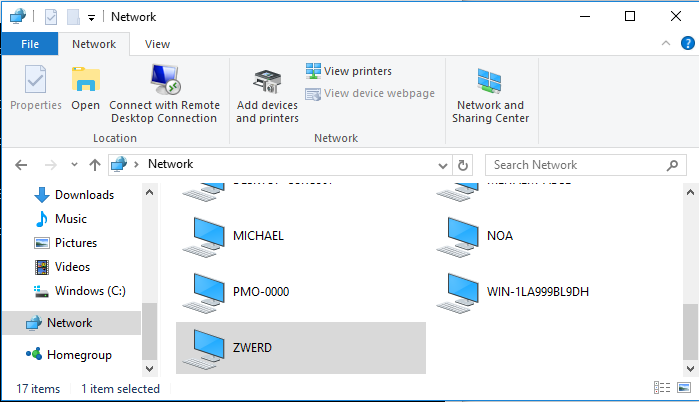

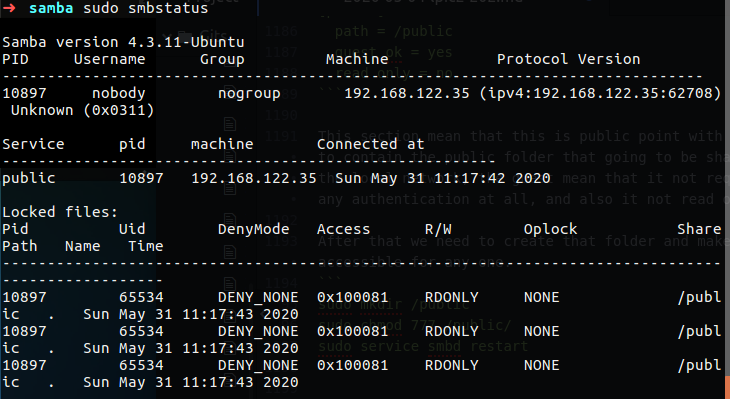

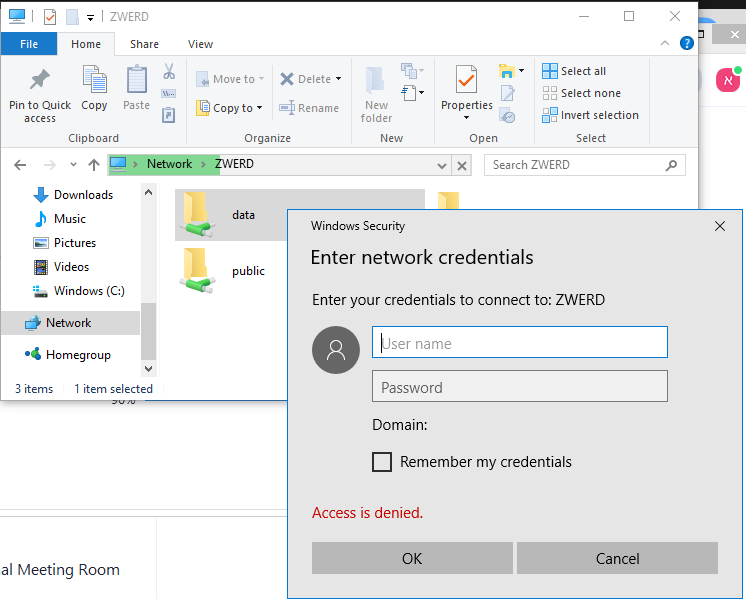

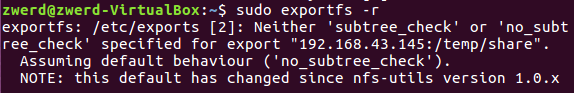

For the File Sharing topic we should be able to set up a Samba server for various clients. I take "various" to mean that our Samba server should let both Linux and Windows clients connect to it using SMB.

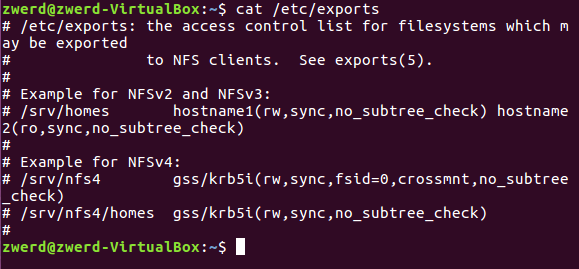

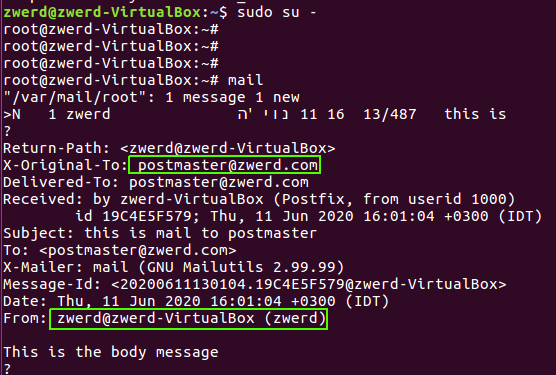

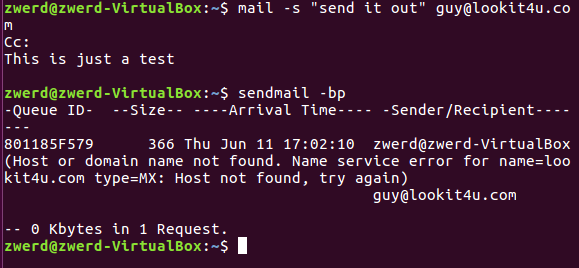

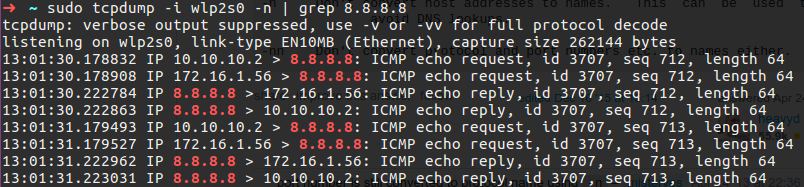

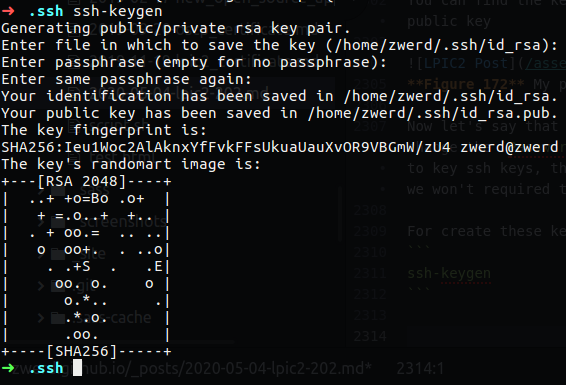

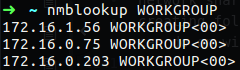

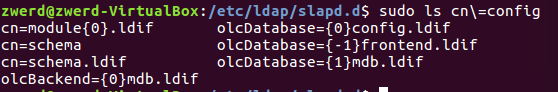

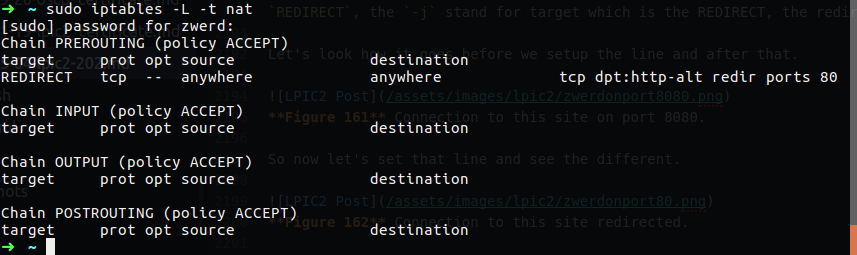

Samba is the suite that lets a Linux server take part in a Windows environment. There are two daemons to know: nmbd, which handles NetBIOS name resolution for file sharing, and smbd, which handles the actual file sharing. Remember that smbd is a daemon; smbclient, by contrast, is just a client tool that lets users interact with a file-sharing server.
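A quick way to confirm smbd is up is to check its TCP ports: 445 (SMB directly over TCP) and 139 (SMB over NetBIOS sessions). nmbd uses UDP 137 and 138, which a TCP connect cannot test. This Python sketch is a hypothetical helper for that check, not a Samba tool; run it on or against the Samba host.

```python
import socket
from typing import Dict

# smbd listens on TCP 445 and 139; nmbd uses UDP 137/138 (not probed here).
SMBD_TCP_PORTS = (445, 139)


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        return s.connect_ex((host, port)) == 0


def smbd_listening(host: str = "127.0.0.1") -> Dict[int, bool]:
    """Check each smbd TCP port on the given host."""
    return {port: tcp_port_open(host, port) for port in SMBD_TCP_PORTS}
```

Usage: `smbd_listening("192.168.1.10")` returns something like `{445: True, 139: True}` when smbd is running (the address is a placeholder).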